Annotated literature for PhD studies

Content-Based Information Retrieval in general

Image Retrieval

- Rui, Huang, Chang: Image Retrieval: Current Techniques, Promising Directions, and Open Issues. Journal of Visual Communication and Image Representation, Volume 10, Issue 1, 1999.

- Veltkamp, Tanase: Content-Based Image Retrieval Systems: A Survey. 2000

- Smeulders, Worring, Santini, Gupta, Jain: Content-Based Image Retrieval at the End of the Early Years. 2000.

- Datta, Li, Wang: Content-Based Image Retrieval - Approaches and Trends of the New Age. MIR’05

- Datta, Joshi, Li, Wang: Image retrieval: Ideas, influences, and trends of the new age. ACM Computing Surveys, 2008.

- Kherfi, Ziou, Bernardi: Image Retrieval From the World Wide Web: Issues, Techniques, and Systems. ACM Computing Surveys, 2004.

- Mueller, Deselaers: Image Retrieval. Tutorial TrebleCLEF Summer School 2009.

- Alemu, Koh, Ikram, Kim: Image Retrieval in Multimedia Databases: A Survey. Int. Conf. on Intelligent Information Hiding and Multimedia Signal Processing

- Enser: The evolution of visual information retrieval. Journal of Information Science, 2008. (full text of the article)

- Online book: Image Search Engines: An Overview

Semantic gap

- Hare, Lewis, Enser, Sandom: Mind the Gap: Another look at the problem of the semantic gap in image retrieval. 2006

- Guan, Antani, Long, Thoma: Bridging the semantic gap using ranking svm for image retrieval. 2009

- Liu, Zhang, Lu, Ma: A survey of content-based image retrieval with high-level semantics. 2006.

- Jain, Sinha: Content Without Context is Meaningless. MM 2010.

- Eickhoff, Li, de Vries: Exploiting User Comments for Audio-Visual Content Indexing and Retrieval. ECIR 2013

- Enser, Sandom: Towards a Comprehensive Survey of the Semantic Gap in Visual Image Retrieval. CIVR 2003: 291-299 (full text of the article)

- Enser, Sandom, Lewis: Surveying the Reality of Semantic Image Retrieval. VISUAL 2005: 177-188 (full text of the article)

User needs

- Armitage, Enser: Analysis of User Need in Image Archives. Journal of Information Science, 1997. Paywalled.

Existing image search systems

- IBM QBIC: Ashley, Flickner, Hafner, Lee, Niblack, Petkovic: The Query By Image Content (QBIC) System. SIGMOD 1995

- SIMPLIcity: Wang, Li, Wiederhold: SIMPLIcity: Semantics-Sensitive Integrated Matching for Picture LIbraries. IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 23, no. 9, pp. 947-963, 2001.

- Web-WISE: Wei, Li, Sethi: Web-WISE: compressed image retrieval over the web. Multimedia Information Analysis and Retrieval, 1998.

- Cortina: Quack, Monich, Thiele, Manjunath: Cortina: A System for Large-scale, Content-based Web Image Retrieval. ACM Multimedia 2004.

- MUFIN: Batko, Falchi, Lucchese, Novak, Perego, Rabitti, Sedmidubsky, Zezula: Building a web-scale image similarity search system. Multimedia Tools and Applications 2010.

- www.MMRetrieval.net: Zagoris, Arampatzis, Chatzichristofis: www.MMRetrieval.net: A Multimodal Search Engine. SISAP 2010.

Descriptors

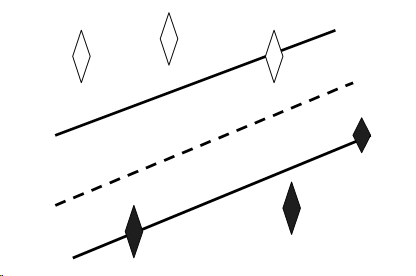

Modalities, similarity measures

Indexing

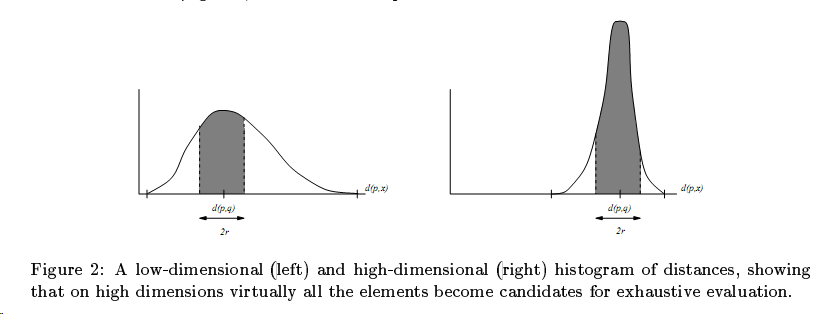

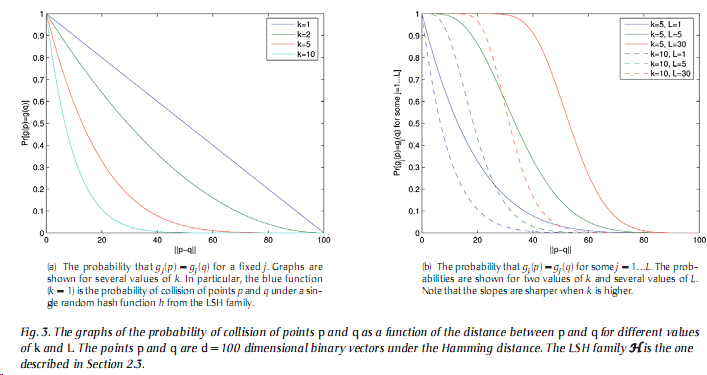

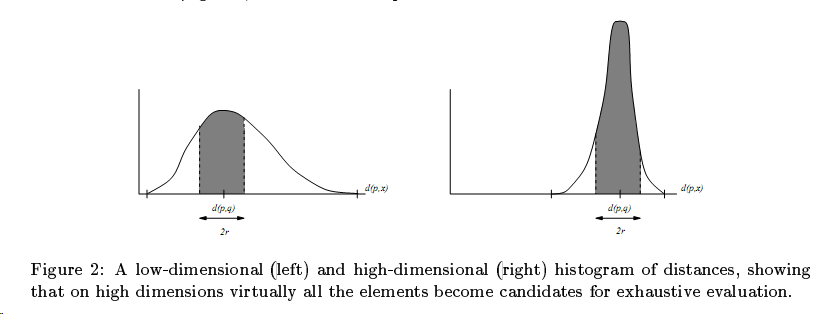

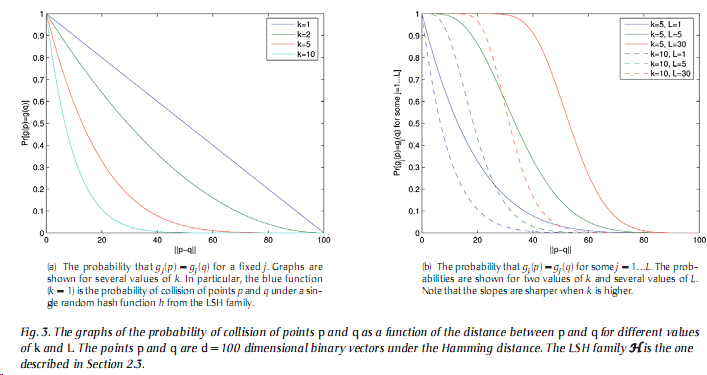

Hashing, LSH

LSH: Theoretical papers

LSH: Theory + experiments

- Andoni, Indyk: Near-Optimal Hashing Algorithms for Approximate Nearest Neighbor in High Dimensions. COMMUNICATIONS OF THE ACM January 2008/Vol. 51, No. 1.

- Gionis, Indyk, Motwani: Similarity Search In High Dimensions via Hashing. VLDB'99.

- Lv, Josephson, Wang, Charikar, Li: Multi-Probe LSH: Efficient Indexing for High-Dimensional Similarity Search. VLDB'07.

- Dong, Wang, Josephson, Charikar, Li: Modeling LSH for Performance Tuning. CIKM'08.

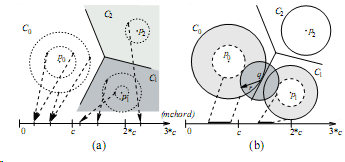

"LPT" (Locality Preserving Transformations) - LSH principles for general metric spaces

"LPT" (Locality Preserving Transformations) - LSH principles for general metric spaces

Query expansion

Query expansion

- Natsev, Haubold, Tesic, Xie, Yan: Semantic concept-based query expansion and re-ranking for multimedia retrieval. ACM Multimedia 2007

- Pinto, Pérez-Sanjulián: Automatic query expansion and word sense disambiguation with long and short queries using WordNet under vector model. 2008. (full text of the article)

- Gong, Cheang, Hou U: Multi-term Web Query Expansion Using WordNet. 2006. (full text of the article)

- Gong, Cheang, Hou U: Web Query Expansion by WordNet. 2005. (full text of the article)

- Deng, Dong, Socher, Li, Li, Fei-Fei: ImageNet: A Large-Scale Hierarchical Image Database. 2009. (full text of the article)

- Harris, Srinivasan: Comparing Crowd-Based, Game-Based, and Machine-Based Approaches in Initial Query and Query Refinement Tasks. 495-506

Ranking, combining metric spaces

Information fusion

- Kludas, Bruno, Marchand-Maillet: Information Fusion in Multimedia Information Retrieval. 2008

- Zhou, Depeursinge, Muller: Information Fusion for Combining Visual and Textual Image Retrieval. Pattern Recognition 2010

- Moulin, Largeron, Gery: Impact of Visual Information on Text and Content Based Image Retrieval. 2010

- Clinchant, Ah-Pine, Csurka: Semantic Combination of Textual and Visual Information in Multimedia Retrieval. ICMR 2011

- Park, Nang: Content Based Web Image Retrieval System Using Both MPEG-7 Visual Descriptors and Textual Information. MMM 2007

- He, Xiong, Yang, Park: Using Multi-Modal Semantic Association Rules to fuse keywords and visual features automatically for Web image retrieval. Information Fusion 2011

- Depeursinge, Müller: Fusion Techniques for Combining Textual and Visual Information Retrieval. Chapter 6 of ImageCLEF Experimental Evaluation in Visual Information Retrieval, Springer 2010

- Arampatzis, Zagoris, Chatzichristofis: Fusion vs. Two-Stage for Multimodal Retrieval. ECIR 2011

- Kokar, Tomasik, Weyman: Formalizing classes of information fusion systems. Information Fusion 2004

- Multimedia Information Retrieval - keynote by Stephane Marchand-Maillet, 2012

Combining metric spaces

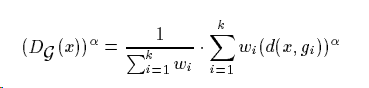

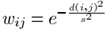

Some kind of dynamic aggregation

- Tung, Zhang, Koudas, Ooi: Similarity Search: A Matching Based Approach. VLDB 06

- Bustos, Skopal: Dynamic Similarity Search in MultiMetric Spaces. MIR 2006

- Bustos, Keim, Saupe, Schreck, Vranic: Using Entropy Impurity for Improved 3D Object Similarity Search. ICME 2004

- Bustos, Keim, Saupe, Schreck, Vranic: Automatic Selection and Combination of Descriptors for Effective 3D Similarity Search. ISMSE 04

Query results clustering

- Ji, Yao, Liu, Wang, Xu. A Novel Retrieval Refinement and Interaction Pattern by Exploring Result Correlations for Image Retrieval. 2008

- Chen, Wang, Krovetz. CLUE: Cluster-Based Retrieval of Images by Unsupervised Learning. IEEE Transactions on Image Processing, vol. 14, no. 8, August 2005

- Jia, Wang, Zhang, Hua. Finding Image Exemplars Using Fast Sparse Affinity Propagation. Proceeding of the 16th ACM international conference on Multimedia, 2008.

- Li, Dai, Xu, Er. Multilabel Neighborhood Propagation for Region-Based Image Retrieval. IEEE Transactions on Image Processing, 2007.

Query results reordering/ranking

- Arampatzis, Zagoris, Chatzichristofis. Dynamic Two-Stage Image Retrieval from Large Multimodal Databases. ECIR 2011

- Ambai, Yoshida. Multiclass VisualRank: Image Ranking Method in Clustered Subsets Based on Visual Features. SIGIR 09

- Jing, Baluja. VisualRank: Applying PageRank to Large-Scale Image Search. IEEE TRANSACTIONS ON PATTERN ANALYSIS AND MACHINE INTELLIGENCE, November 2008

- Jing, Baluja. PageRank for Product Image Search. WWW 2008, Beijing, China.

- Yiu, Mamoulis: Multi-dimensional top-k dominating queries. VLDB Journal 2009.

- Park, Baek, Lee: A Ranking Algorithm Using Dynamic Clustering for Content-Based Image Retrieval, 2002.

- Park, Baek, Lee: Majority Based Ranking Approach in Web Image Retrieval, CIVR 2003.

- Joyce M. dos Santos, Joao M. B. Cavalcanti, Patricia Correia Saraiva, Edleno Silva de Moura: Multimodal Re-ranking of Product Image Search Results. 62-73

- Thollard, Quénot: Content-Based Re-ranking of Text-Based Image Search Results. ECIR 2013, 618-629

Other

Relevance feedback

Understanding relevance

Basic approaches in general

Relevance feedback in image retrieval

- Ishikawa, Subramanya, Faloutsos: MindReader: Querying databases through multiple examples. VLDB 98.

- Rui, Huang, Ortega, Mehrotra: Relevance Feedback: A Power Tool in Interactive Content-Based Image Retrieval. IEEE Trans. Circuits and Systems for Video Technology, 8(5):644–655, 1998.

- Zhou, Huang: Relevance feedback in image retrieval: A comprehensive review. 2002.

- Shen, Jiang, Tan, Huang, Zhou: Speed up interactive image retrieval. 2008.

- Huiskes, Lew: Performance Evaluation of Relevance Feedback Methods. CIVR’08.

- Wu, Faloutsos, Sycara, Payne: FALCON: Feedback Adaptive Loop for Content-Based Retrieval. VLDB 2000.

- Razente, Barioni, Traina, Traina: Aggregate Similarity Queries in Relevance Feedback Methods for Content-based Image Retrieval. SAC’08.

- Hua, Yu, Liu: Query Decomposition: A Multiple Neighborhood Approach to Relevance Feedback Processing in Content-based Image Retrieval. ICDE’06

- Vu, Cheng, Hua: Image Retrieval in Multipoint Queries. 2008.

Image annotation

Surveys

Model-based approaches

Search-based approaches

Text-based approaches

- Eickhoff, Li, de Vries: Exploiting User Comments for Audio-Visual Content Indexing and Retrieval. ECIR 2013

- Noel, Peterson: Context-Driven Image Annotation Using ImageNet. 26th International Florida Artificial Intelligence Research Society Conference, 2013

- Du, Rau, Huang, Chen: Improving the quality of tags using state transition on progressive image search and recommendation system. SMC 2012

- Sun, Bhowmick, Chong: Social image tag recommendation by concept matching. ACM Multimedia 2011

- Lux, Pitman, Marques: Can Global Visual Features Improve Tag Recommendation for Image Annotation? Future Internet 2010

Annotation refinement techniques

- Belém, Martins, Almeida, Gonçalves: Exploiting Novelty and Diversity in Tag Recommendation. ECIR 2013

- McParlane, Jose: Exploiting Time in Automatic Image Tagging. ECIR 2013, 520-531

- Kern, R.; Granitzer, M.; Pammer, V. Extending Folksonomies for Image Tagging. In Proceedings of 9th International Workshop on Image Analysis for Multimedia Interactive Services, Klagenfurt, Austria, 7–9 May 2008.

- Graham, R.; Caverlee, J. Exploring Feedback Models in Interactive Tagging. In Proceedings of the 2008 IEEE/WIC/ACM International Conference on Web Intelligence and Intelligent Agent Technology, Sydney, Australia, 9–12 December 2008; IEEE Computer Society: Washington, DC, USA, 2008; pp. 141–147.

Domain specific solutions

Manual annotation, ground truth acquisition

- Ames, Naaman: Why we tag: motivations for annotation in mobile and online media. In: SIGCHI 2007 (2007)

Resources

Other

- Deselaers, Ferrari: Visual and semantic similarity in ImageNet. Computer Vision and Pattern Recognition (CVPR), 2011.

- Heesch, Yavlinsky, Rüger: NNk Networks and Automated Annotation for Browsing Large Image Collections from the World Wide Web. Multimedia 2006.

- Noah, Ali, Alhadi, Kassim: Going Beyond the Surrounding Text to Semantically Annotate and Search Digital Images. ACIIDS 2010.

- Nowak, Huiskes: New Strategies for Image Annotation: Overview of the Photo Annotation Task at ImageCLEF 2010. ImageCLEF 2011.

- Barnard, Duygulu, Forsyth, Freitas, Blei, Jordan: Matching Words and Pictures. 2003. (full text of the article)

- Wang, Zhang, Jing, Ma: Annosearch: Image auto-annotation by search. CVPR 2006. (full text of the article)

- Enser, Sandom, Lewis: Automatic Annotation of Images from the Practitioner Perspective. CIVR 2005: 497-506 (full text of the article)

- Sinha, Jain: Semantics in Digital Photos: A Contextual Analysis. In Proceedings of ICSC '08: The 2008 IEEE International Conference on Semantic Computing, Santa Clara, CA, USA, 4–7 August 2008; IEEE Computer Society: Washington, DC, USA, pp. 58–65.

Summarization of image collections

- Rudinac, Larson, Hanjalic: Learning Crowdsourced User Preferences for Visual Summarization of Image Collections. IEEE Transactions on Multimedia, 2013

- Metze, Ding, Younessian, Hauptmann: Beyond audio and video retrieval: topic-oriented multimedia summarization. International Journal of Multimedia Information Retrieval, 2013

- Tan, Song, Liu, Xie: ImageHive: Interactive Content-Aware Image Summarization. IEEE Computer Graphics and Applications, 2012

- Zheng, Herranz, Jiang: Flexible navigation in smartphones and tablets using scalable storyboards. ICMR 2013

- Liu, Wang, Sun, Zheng, Tang, Shum: Picture Collage. IEEE Transactions on Multimedia, 2009

- Ekhtiyar, Sheida, Amintoosi: Picture Collage with Genetic Algorithm and Stereo vision. CoRR, 2012

- Zhang, Huang: Hierarchical Narrative Collage For Digital Photo Album. Computer Graphics Forum, 2012

- Nenkova, McKeown: A Survey of Text Summarization Techniques. Mining Text Data, 2012

Ontologies

Evaluation

Building ground truth

- Yao, Yang, Zhu: Introduction to a Large-Scale General Purpose Ground Truth Database: Methodology, Annotation Tool and Benchmarks. 2007. (full text of the article)

- Muller, Geissbuhler, Marchand–Maillet, Clough. Benchmarking Image Retrieval Applications. 2004. (full text of the article)

- Alonso, Mizzaro: Can we get rid of trec assessors? Using mechanical turk for relevance assessment. In: SIGIR 2009 Workshop on the Future of IR Evaluation (2009)

Performance metrics

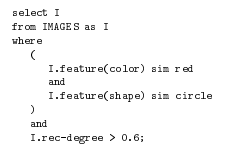

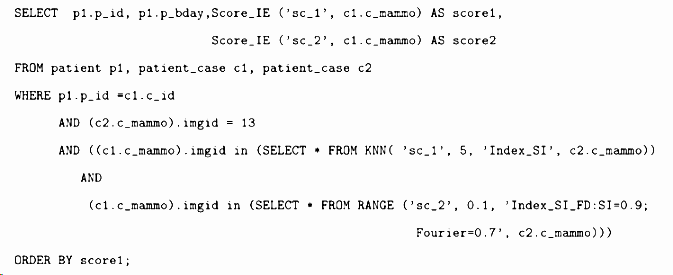

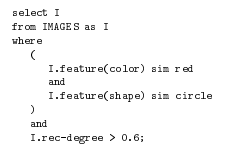

Query Language

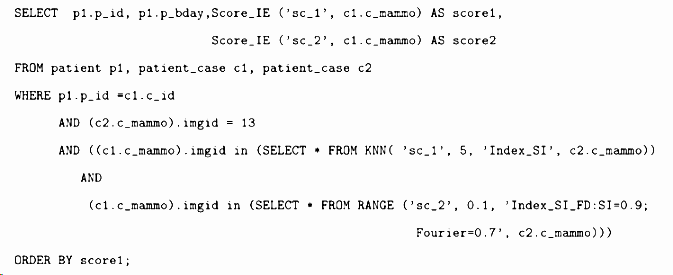

- Amato, Mainetto, Savino: A Query Language for Similarity-based Retrieval of Multimedia Data. Advances in Databases and Information Systems 1997.

- Adali, Bonatti, Sapino, Subrahmanian: A multi-similarity algebra. SIGMOD 98

- Town, Sinclair: Ontological Query Language for Content Based Image Retrieval. ANSS’03.

- Döller, Kosch, Wolf, Gruhne: Towards an MPEG-7 Query Language. SITIS 2006

- Mamou, Mass, Shmueli-Scheuer, Sznajder: A Query Language for Multimedia Content. Sigir’07.

- Gruhne, Tous, Delgado, Doeller, Kosch: MP7QF: An MPEG-7 Query Format. 2007.

- Guliato, de Melo, Rangayyan, Soares: POSTGRESQL-IE: An Image-handling Extension for PostgreSQL. Journal of Digital Imaging, Vol 22, No 2, 2009

- Pein, Lu, Renz: An Extensible Query Language for Content Based Image Retrieval based on Lucene. 2008

- Döller, Tous, Gruhne, Yoon, Sano, Burnett: The MPEG Query Format: Unifying Access to Multimedia Retrieval Systems. IEEE MultiMedia, 2008.

- Barioni, Razente, Traina, Traina: Seamlessly integrating similarity queries in SQL. Software - Practice and Experience, 2009

- Silva, Aref, Larson, Pearson, Ali: Similarity queries: their conceptual evaluation, transformations, and processing. VLDB Journal, June 2013

Other interesting papers and ideas

Datta, Li, Wang: Content-Based Image Retrieval - Approaches and Trends of the New Age. MIR’05

full text of the article,

bibtex

@inproceedings{Datta:2005:CIR:1101826.1101866,

author = {Datta, Ritendra and Li, Jia and Wang, James Z.},

title = {Content-based image retrieval: approaches and trends of the new age},

booktitle = {Proceedings of the 7th ACM SIGMM international workshop on Multimedia information retrieval},

series = {MIR '05},

year = {2005},

isbn = {1-59593-244-5},

location = {Hilton, Singapore},

pages = {253--262},

numpages = {10},

url = {http://doi.acm.org/10.1145/1101826.1101866},

doi = {http://doi.acm.org/10.1145/1101826.1101866},

acmid = {1101866},

publisher = {ACM},

address = {New York, NY, USA},

keywords = {annotation, content-based image retrieval},

}

A good introduction to CBIR (what makes it harder than text retrieval) and to why it is worth studying (documented by the growth in publications in this area over recent years; 120 useful citations!). Contains a brief discussion of the descriptors in use and a summary of the basic approaches to CBIR (semantics-sensitive approach; use of hierarchical perceptual grouping of primitive image features and their inter-relationships to characterize structure; clustering has been applied to image retrieval to help improve interface design, visualization, and result pre-processing). Annotation and Concept Detection: very beneficial when annotation succeeds, but that is very hard; so far it works only for coarse categorization, and learning from previous results can help. A very good map of the basic approaches to relevance feedback. Discussion of hardware acceleration and suitable user interfaces.

- The trends in these graphs indicate a roughly exponential growth in interest in image

retrieval and closely related topics. We also note that growth in the field has been particularly

strong over the last five years, spanning new techniques, new support systems, and diverse

application domains. Yet, a brief scanning of about 300 relevant papers published in the

last five years revealed that less than 20% were concerned with applications or real-world

systems. This may not be a cause for concern, since the theoretical foundation (if any

such exists) behind how we humans interpret images is still an open problem. But then, with

hundreds of different approaches proposed so far, and no consensus reached on any, it is rather

optimistic to believe that we will chance upon a reliable one in the near future. Instead, it may make

more sense to build systems that are useful, even if their use is limited to specific domains.

A way to see this is that natural language interpretation is an unsolved problem, yet text-based

search engines have proved very useful.

- Relevance feedback (RF) is a query modification technique, originating in information retrieval, that

attempts to capture the user’s precise needs through iterative feedback and query refinement....

One problem with RF is that after every round of user interaction, usually the top results with respect to the

query have to be recomputed using a modified similarity measure. A way to speed up this nearest-neighbor search has

been proposed in [109]. Another issue is the user’s patience in supporting multi-round feedbacks. A way to reduce the

user’s interaction is to incorporate logged feedback history into the current query [41]. History of usage

can also help in capturing the relationship between high level semantics and low level features [36].

We can also view RF as an active learning process, where the learner chooses an appropriate

subset for feedback from the user in each round based on her previous rounds of feedback, instead

of choosing a random subset. Active learning using SVMs was introduced into the field of image

retrieval in [95]. Extensions to the active learning process have also been proposed [31, 37]. ...

With the increase in popularity of region-based image retrieval [12, 104], attempts have been

made to incorporate the region factor into RF using query point movement and support vector

machines [49, 50]. A tree-structured self-organizing map has been used as an underlying technique

for RF [58] in a content-based image retrieval system [57]. Probabilistic approaches have been

taken in [21, 91, 100]. A clustering based approach to RF incorporating the user’s perception

in case of complex queries has been studied in [53]. In [39], manifold learning on the user’s feedback

based on geometric intuitions about the underlying feature space is proposed. While most RF algorithms

proposed deal with a two-class problem, i.e., relevant or irrelevant images, another way of looking

at RF is to consider multiple relevant and irrelevant groups of images using an appropriate user

interface [40, 79, 118]. For example, if the user is looking for cars, then she can highlight

groups of blue cars and red cars as relevant examples, since it may not be possible to

represent the concept car uniformly in any low level feature space. Yet another deviation from

norm is the use of multilevel relevance scores to incorporate the relative degrees of relevance

of certain images to the user’s query [108].

Datta, Joshi, Li, Wang: Image retrieval: Ideas, influences, and trends of the new age. ACM Computing Surveys, 2008.

full text of the article,

bibtex

@article{Datta:2008:IRI:1348246.1348248,

author = {Datta, Ritendra and Joshi, Dhiraj and Li, Jia and Wang, James Z.},

title = {Image retrieval: Ideas, influences, and trends of the new age},

journal = {ACM Comput. Surv.},

volume = {40},

issue = {2},

month = {May},

year = {2008},

issn = {0360-0300},

pages = {5:1--5:60},

articleno = {5},

numpages = {60},

url = {http://doi.acm.org/10.1145/1348246.1348248},

doi = {http://doi.acm.org/10.1145/1348246.1348248},

acmid = {1348248},

publisher = {ACM},

address = {New York, NY, USA},

keywords = {Content-based image retrieval, annotation, learning, modeling, tagging},

}

An extended version of the previous paper. The introduction gives a brief summary of the Smeulders00 survey.

Kherfi, Ziou, Bernardi: Image Retrieval From the World Wide Web: Issues, Techniques, and Systems. ACM Computing Surveys, 2004.

full text of the article,

bibtex

@article{Kherfi:2004:IRW:1013208.1013210,

author = {Kherfi, M. L. and Ziou, D. and Bernardi, A.},

title = {Image Retrieval from the World Wide Web: Issues, Techniques, and Systems},

journal = {ACM Comput. Surv.},

volume = {36},

issue = {1},

month = {March},

year = {2004},

issn = {0360-0300},

pages = {35--67},

numpages = {33},

url = {http://doi.acm.org/10.1145/1013208.1013210},

doi = {http://doi.acm.org/10.1145/1013208.1013210},

acmid = {1013210},

publisher = {ACM},

address = {New York, NY, USA},

keywords = {Image-retrieval, World Wide Web, crawling, feature extraction and selection, indexing, relevance feedback, search, similarity},

}

Why web image search is needed. Applications: various categories, nicely analyzed. Which services people would expect from a web search engine: query-based retrieval, browsing, summary of a set of images. Then a description of several existing image search systems, most of which combine text and visual search. A good overview of sources and functionality (characteristics, which descriptors are used, which queries each system supports).

The next section deals with the design of a Web image search engine, specifically these parts: data gathering, the identification and the estimation of image descriptors, similarity and matching, indexing, query specification, retrieval and refinement, Web coverage, and performance evaluation. For text search, it gives a nice overview of which parts of a page each system uses, with a reference to an article on how to identify the relevant words in a page. It also gives a good overview of query formulation options and recommends using both text and image.

The following part defines an evaluation test-bed and metrics for comparing systems, including non-traditional ones such as ease of use and number of iterations. Finally, it lists various open issues, e.g. understanding user needs; there are some citations, although to older articles.

- It is very useful for a Web image search system to offer an image taxonomy based on subjects such

as art and culture, sports, world regions, technology etc. ... WebSeek offers an online catalog.

- page zero problem: finding a good query image to initiate the retrieval. To avoid falling into

this problem, the system must provide the user with a set of candidate images that are representative of the entire visual

content of the Web, for example, by choosing an image from each category. If none of these images is appropriate, the user

should be allowed to choose to view more candidate images. Another solution to the page zero problem, used in the PicToSeek

system, involves asking the user to provide an initial image; however, it is not always easy for users to find such an image.

ImageRover and WebSeek ask the user to introduce a textual query at the beginning, after which both textual and visual

refinement are possible. In Kherfi et al. [2003b], we showed that the use of a positive example together with a negative example

can also help to mitigate the page zero problem.

Alemu, Koh, Ikram, Kim: Image Retrieval in Multimedia Databases: A Survey. Int. Conf. on Intelligent Information Hiding and Multimedia Signal Processing

full text of the article,

bibtex

@inproceedings{DBLP:conf/iih-msp/AlemuKIK09,

author = {Yihun Alemu and

Jong-bin Koh and

Muhammad Ikram and

Dong-Kyoo Kim},

title = {Image Retrieval in Multimedia Databases: A Survey},

booktitle = {IIH-MSP},

year = {2009},

pages = {681-689},

ee = {http://doi.ieeecomputersociety.org/10.1109/IIH-MSP.2009.159},

crossref = {DBLP:conf/iih-msp/2009},

bibsource = {DBLP, http://dblp.uni-trier.de}

}

@proceedings{DBLP:conf/iih-msp/2009,

editor = {Jeng-Shyang Pan and

Yen-Wei Chen and

Lakhmi C. Jain},

title = {Fifth International Conference on Intelligent Information

Hiding and Multimedia Signal Processing (IIH-MSP 2009),

Kyoto, Japan, 12-14 September, 2009, Proceedings},

booktitle = {IIH-MSP},

publisher = {IEEE Computer Society},

year = {2009},

isbn = {978-1-4244-4717-6},

ee = {http://ieeexplore.ieee.org/xpl/mostRecentIssue.jsp?punumber=5337074},

bibsource = {DBLP, http://dblp.uni-trier.de}

}

An overview of approaches: text search, CBIR, ontology-based image retrieval. It does not go into much depth, but it is good for a quick overview and can be cited as an introduction to text-based and content-based image search.

Mueller, Deselaers: Image Retrieval. Tutorial TrebleCLEF Summer School 2009.

website with the tutorial presentations

Hare, Lewis, Enser, Sandom: Mind the Gap: Another look at the problem of the semantic gap in image retrieval. 2006

full text of the article,

bibtex

@article{Hare_2006,

title={Mind the gap: another look at the problem of the semantic gap in image retrieval},

volume={6073},

url={http://link.aip.org/link/PSISDG/v6073/i1/p607309/s1&Agg=doi},

number={1},

journal={Proceedings of SPIE},

publisher={Spie},

author={Hare, J S},

year={2006},

pages={607309--607309-12}

}

Considers semantic queries such as "Find an image of a wolf in the snow on a road in Atlanta from the year 2000". The semantic gap is split into several parts: image => representation => objects => keywords => semantics. Describes existing approaches to automatic keyword annotation, which the authors call the bottom-up approach. The top-down approach goes through ontologies.

Guan, Antani, Long, Thoma: Bridging the semantic gap using ranking svm for image retrieval. 2009

full text of the article,

bibtex

@INPROCEEDINGS{5193057,

author={Haiying Guan and Antani, S. and Long, L.R. and Thoma, G.R.},

booktitle={Biomedical Imaging: From Nano to Macro, 2009. ISBI '09. IEEE International Symposium on},

title={Bridging the semantic gap using Ranking SVM for image retrieval},

year={2009},

month={28 2009-july 1},

volume={},

number={},

pages={354 -357},

keywords={X-ray imaging;content-based image retrieval;image features;ranking SVM;ranking support vector machine algorithm;semantic concepts;spine;supervised learning algorithm;vertebra shape retrieval;bone;diagnostic radiography;image retrieval;learning (artificial intelligence);medical image processing;support vector machines;},

doi={10.1109/ISBI.2009.5193057},

ISSN={1945-7928},

}

For a collection of spine X-ray images, the authors apply an SVM-based learning algorithm; the innovation is that it learns an optimal ranking rather than categories. A brief, clear, and to-the-point article; this is probably a good solution for specialized collections.

Liu, Zhang, Lu, Ma: A survey of content-based image retrieval with high-level semantics. 2006.

full text of the article,

bibtex

@article{Liu2007262,

title = "A survey of content-based image retrieval with high-level semantics",

journal = "Pattern Recognition",

volume = "40",

number = "1",

pages = "262 - 282",

year = "2007",

note = "",

issn = "0031-3203",

doi = "DOI: 10.1016/j.patcog.2006.04.045",

url = "http://www.sciencedirect.com/science/article/pii/S0031320306002184",

author = "Ying Liu and Dengsheng Zhang and Guojun Lu and Wei-Ying Ma",

keywords = "Content-based image retrieval",

keywords = "Semantic gap",

keywords = "High-level semantics",

keywords = "Survey"

}

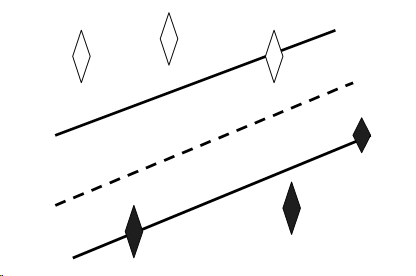

A survey of approaches to bridging the semantic gap. The authors identify five main approaches:

(1) using object ontology to define high-level concepts;

(2) using machine learning methods to associate low-level features with query concepts;

(3) using relevance feedback to learn users’ intention;

(4) generating semantic template to support high-level image retrieval;

(5) fusing the evidences from HTML text and the visual content of images for WWW image retrieval.

The introductory part describes segmentation and descriptor extraction methods very clearly.

Object ontology: segmented regions are described by descriptor properties, for example light blue, uniform, located at the top. At the next level these properties are mapped to the concept "sky". Ways of naming colors are also covered; this probably suits retrieval in art collections especially well.

Machine learning: supervised learning aims to teach the system to categorize or label correctly; unsupervised learning tries to capture how the data are organized or clustered. Supervised approaches: SVM, Bayesian classification, neural networks, decision trees, bootstrapping. Some authors use decision trees for relevance feedback (deciding relevant/irrelevant). A typical example of unsupervised learning is image clustering (k-means clustering, Normalized cut, CLUE; locality preserving clustering). Learning can also be used for object recognition.
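The image clustering mentioned in the machine-learning paragraph can be illustrated with a minimal k-means sketch. It is an illustration only, not the procedure of any surveyed system: the feature vectors stand in for hypothetical low-level image descriptors, and seeding the centroids with the first `k` points is a simplification chosen to keep the example deterministic.

```python
from typing import List, Sequence, Tuple


def squared_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Squared Euclidean distance between two feature vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def k_means(points: Sequence[Sequence[float]], k: int,
            iterations: int = 10) -> Tuple[List[int], List[List[float]]]:
    """Cluster feature vectors; returns (cluster index per point, centroids)."""
    # Assumption: seed centroids with the first k points (deterministic, naive).
    centroids = [list(p) for p in points[:k]]
    assignment = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid.
        assignment = [
            min(range(k), key=lambda c: squared_distance(p, centroids[c]))
            for p in points
        ]
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, a in zip(points, assignment) if a == c]
            if members:
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    return assignment, centroids


# Hypothetical 2-D descriptors of four images forming two visual groups.
features = [[0.0, 0.0], [0.0, 1.0], [10.0, 10.0], [10.0, 11.0]]
labels, centers = k_means(features, k=2)
```

In a retrieval setting the resulting cluster labels could be used to group query results, which is the role clustering plays in systems such as CLUE.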

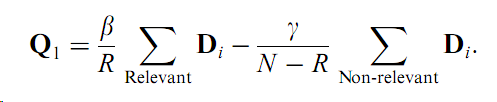

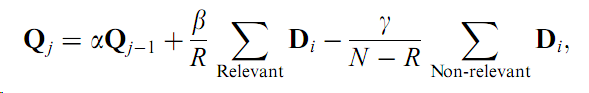

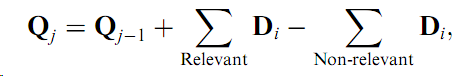

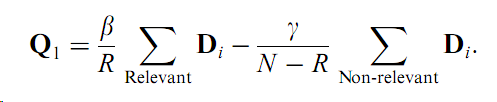

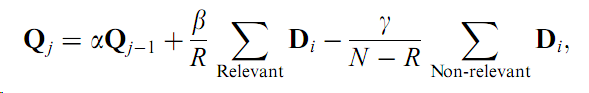

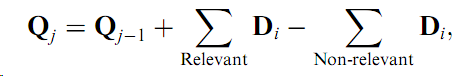

Relevance feedback: an online method. The main approaches are re-weighting and query point movement (which often uses the Rocchio formula). Both methods use nearest-neighbor sampling. Machine learning techniques are often used to determine the modified query.
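Query point movement with the Rocchio formula can be sketched as follows. This is a minimal illustration under assumptions: the function names and the default weights `alpha`, `beta`, and `gamma` are illustrative choices, not values taken from the survey, and images are assumed to be represented as plain feature vectors.

```python
from typing import List, Sequence

Vector = List[float]


def centroid(vectors: Sequence[Sequence[float]], dim: int) -> Vector:
    """Component-wise mean; a zero vector when the set is empty."""
    if not vectors:
        return [0.0] * dim
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]


def rocchio_update(query: Sequence[float],
                   relevant: Sequence[Sequence[float]],
                   irrelevant: Sequence[Sequence[float]],
                   alpha: float = 1.0,
                   beta: float = 0.75,
                   gamma: float = 0.15) -> Vector:
    """Move the query toward relevant examples and away from irrelevant ones.

    q' = alpha * q + beta * mean(relevant) - gamma * mean(irrelevant)
    The default weights are assumptions chosen for illustration.
    """
    dim = len(query)
    pos = centroid(relevant, dim)
    neg = centroid(irrelevant, dim)
    return [alpha * q + beta * p - gamma * n
            for q, p, n in zip(query, pos, neg)]


# One feedback round: the user marked two results relevant, one irrelevant.
new_query = rocchio_update(
    query=[1.0, 0.0],
    relevant=[[1.0, 1.0], [1.0, 3.0]],
    irrelevant=[[0.0, 2.0]],
    alpha=1.0, beta=0.5, gamma=0.5,
)
```

After each round the modified query is used for a new nearest-neighbor search, which is why the cost of recomputing the top results matters in interactive systems.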

Semantic templates: a mapping between semantic concepts and low-level descriptors. A semantic template is usually defined as the representative feature of a concept, derived from sample objects. It can be built during relevance feedback.

Web image retrieval: has specific properties; it can use additional information obtained from the web page (URL, title, alt, description, hyperlinks).
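
To make the idea of page-derived context concrete, the sketch below collects the page title together with the `src` and `alt` of each image using Python's standard `html.parser`. The class and function names are made up for this note, and a real crawler would also need surrounding text and link anchors.

```python
from html.parser import HTMLParser


class ImageContextParser(HTMLParser):
    """Collects the page title and the src/alt attributes of every <img>."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.images = []
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "img":
            self.images.append(
                {"src": attributes.get("src") or "", "alt": attributes.get("alt") or ""}
            )

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def image_context(html):
    """Return one textual-context record per image found in the page."""
    parser = ImageContextParser()
    parser.feed(html)
    parser.close()
    return [{"title": parser.title.strip(), **image} for image in parser.images]
```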

The article also contains an extensive discussion of test data, especially the advantages and disadvantages of the most widely used collection, Corel. It then addresses suitable vocabularies for semantic concepts. Finally, it covers evaluation methods: precision, recall, their ratios, the ratio to scope, and rank.
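
For reference, a minimal sketch of the basic measures named above, computed for a ranked result list cut off at a given scope (the number of results shown to the user). The function names and the exact definition of the rank measure (mean position of the relevant items that were retrieved) are assumptions made for this note.

```python
def precision_recall(ranked_ids, relevant_ids, scope):
    """Precision and recall over the first `scope` results."""
    retrieved = ranked_ids[:scope]
    hits = sum(1 for doc in retrieved if doc in relevant_ids)
    precision = hits / len(retrieved) if retrieved else 0.0
    recall = hits / len(relevant_ids) if relevant_ids else 0.0
    return precision, recall


def mean_rank(ranked_ids, relevant_ids):
    """Average 1-based position of the relevant items that were retrieved."""
    ranks = [pos for pos, doc in enumerate(ranked_ids, start=1) if doc in relevant_ids]
    return sum(ranks) / len(ranks) if ranks else None
```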

In the next section they mention the need for a query language and describe several existing proposals, all of which, however, are semantic. They also briefly cover indexing structures and point out that these are often not specialized for the properties of images (although some do attempt this).

Jain, Sinha: Content Without Context is Meaningless. MM 2010.

full text of the article,

bibtex

@inproceedings{Jain:2010:CWC:1873951.1874199,

author = {Jain, Ramesh and Sinha, Pinaki},

title = {Content without context is meaningless},

booktitle = {Proceedings of the international conference on Multimedia},

series = {MM '10},

year = {2010},

isbn = {978-1-60558-933-6},

location = {Firenze, Italy},

pages = {1259--1268},

numpages = {10},

url = {http://doi.acm.org/10.1145/1873951.1874199},

doi = {http://doi.acm.org/10.1145/1873951.1874199},

acmid = {1874199},

publisher = {ACM},

address = {New York, NY, USA},

keywords = {content, context, exif, image, mobile, perception, search},

}

A critique of the current research trend: we do content-based work and neglect context, yet in practice it is context that gets used, not our work. We try to solve too many problems with machine learning (the "Machine Learning Hammer"). They raise the question of whether current research is solving the right problem at all (what each medium is used for, which information in it matters...). The multimedia content problem should be treated as a "perception problem", where the environment, the medium and the recipient must be taken into account. A nice summary of the image understanding problem: why it is hard and why the current approach works only for limited domains.

Human perception relies heavily on context: our knowledge of the environment and our experience. In computer processing, context can help narrow the search space, which is essential because the space is otherwise too large and diverse. The problem is perhaps not so much performance as the excessive amount of noise.

Tips for concrete uses of context: EXIF metadata. For example, exposure time can be used to estimate whether a photo was taken during the day or at night, outdoors or indoors, etc.; focal length can be used to estimate size.

Eickhoff, Li, de Vries: Exploiting User Comments for Audio-Visual Content Indexing and Retrieval. ECIR 2013

full text of the article,

bibtex

@inproceedings{DBLP:conf/ecir/EickhoffLV13,

author = {Carsten Eickhoff and

Wen Li and

Arjen P. de Vries},

title = {Exploiting User Comments for Audio-Visual Content Indexing

and Retrieval},

booktitle = {ECIR},

year = {2013},

pages = {38-49},

ee = {http://dx.doi.org/10.1007/978-3-642-36973-5_4},

crossref = {DBLP:conf/ecir/2013},

bibsource = {DBLP, http://dblp.uni-trier.de}

}

@proceedings{DBLP:conf/ecir/2013,

editor = {Pavel Serdyukov and

Pavel Braslavski and

Sergei O. Kuznetsov and

Jaap Kamps and

Stefan M. R{\"u}ger and

Eugene Agichtein and

Ilya Segalovich and

Emine Yilmaz},

title = {Advances in Information Retrieval - 35th European Conference

on IR Research, ECIR 2013, Moscow, Russia, March 24-27,

2013. Proceedings},

booktitle = {ECIR},

publisher = {Springer},

series = {Lecture Notes in Computer Science},

volume = {7814},

year = {2013},

isbn = {978-3-642-36972-8},

ee = {http://dx.doi.org/10.1007/978-3-642-36973-5},

bibsource = {DBLP, http://dblp.uni-trier.de}

}

Didn't read in detail, but the main idea is pretty simple and interesting at the same time: use a new modality

for video search - tags extracted from YouTube comments. Some time series analysis and filtering by Wikipedia are used.

Noel, Peterson: Context-Driven Image Annotation Using ImageNet. 26th International Florida Artificial Intelligence Research Society Conference, 2013

full text of the article,

bibtex

@inproceedings{DBLP:conf/flairs/NoelP13,

author = {George E. Noel and

Gilbert L. Peterson},

title = {Context-Driven Image Annotation Using ImageNet},

booktitle = {FLAIRS Conference},

year = {2013},

ee = {http://www.aaai.org/ocs/index.php/FLAIRS/FLAIRS13/paper/view/5892},

crossref = {DBLP:conf/flairs/2013},

bibsource = {DBLP, http://dblp.uni-trier.de}

}

@proceedings{DBLP:conf/flairs/2013,

editor = {Chutima Boonthum-Denecke and

G. Michael Youngblood},

title = {Proceedings of the Twenty-Sixth International Florida Artificial

Intelligence Research Society Conference, FLAIRS 2013, St.

Pete Beach, Florida. May 22-24, 2013},

booktitle = {FLAIRS Conference},

publisher = {AAAI Press},

year = {2013},

ee = {http://www.aaai.org/Library/FLAIRS/flairs13contents.php},

bibsource = {DBLP, http://dblp.uni-trier.de}

}

Annotate images using visual content and context = keywords from title/surrounding page/whatever. Use the

keywords to determine synsets from WordNet, then use appropriate ImageNet data to compare with the image

to be annotated, select the most probable synsets. Face classifier used to verify people-related concepts.

Lew, Sebe, Djeraba, Jain: Content-Based Multimedia Information Retrieval: State of the Art and Challenges. ACM 06.

full text of the article,

bibtex

@article{Lew:2006:CMI:1126004.1126005,

author = {Lew, Michael S. and Sebe, Nicu and Djeraba, Chabane and Jain, Ramesh},

title = {Content-based multimedia information retrieval: State of the art and challenges},

journal = {ACM Trans. Multimedia Comput. Commun. Appl.},

volume = {2},

issue = {1},

month = {February},

year = {2006},

issn = {1551-6857},

pages = {1--19},

numpages = {19},

url = {http://doi.acm.org/10.1145/1126004.1126005},

doi = {http://doi.acm.org/10.1145/1126004.1126005},

acmid = {1126005},

publisher = {ACM},

address = {New York, NY, USA},

keywords = {Multimedia information retrieval, audio retrieval, human-computer interaction, image databases, image search, multimedia indexing, video retrieval},

}

One of the main challenges is the semantic gap: search using low-level descriptors is suitable only for some applications and expert users; for ordinary users the descriptors need to be "translated" into understandable concepts. The paper again mentions the problem of objective benchmarks for comparison. It includes references to various studies of what users need.

- 2.2.2 Relevance Feedback. Beyond the one-shot queries in the early similarity-based search systems,

the next generation of systems attempted to integrate continuous feedback from the user in order

to learn more about the user query. The interactive process of asking the user a sequential set of

questions after each round of results was called relevance feedback because of its similarity to older

pure text approaches. Relevance feedback can be considered a special case of emergent semantics. Other

names have included query refinement, interactive search, and active learning from the computer vision

literature. The fundamental idea behind relevance feedback is to show the user a list of candidate images,

ask the user to decide whether each image is relevant or irrelevant, and modify the parameter space,

semantic space, feature space, or classification space to reflect the relevant and irrelevant examples.

In the simplest relevance feedback method from Rocchio [1971], the idea is to move the query point

toward the relevant examples and away from the irrelevant examples. In principle, one general view

is to view relevance feedback as a particular type of pattern classification in which the positive and

negative examples are found from the relevant and irrelevant labels, respectively.

Bozzon, Fraternali: Multimedia and Multimodal Information Retrieval. Search Computing, Chapter 8, Springer 2010.

full text of the article,

bibtex

@incollection {springerlink:10.1007/978-3-642-12310-8_8,

author = {Bozzon, Alessandro and Fraternali, Piero},

affiliation = {Politecnico di Milano Dipartimento di Elettronica e Informazione Piazza Leonardo da Vinci 32 20133 Milano Italy},

title = {Chapter 8: Multimedia and Multimodal Information Retrieval},

booktitle = {Search Computing},

series = {Lecture Notes in Computer Science},

editor = {Ceri, Stefano and Brambilla, Marco},

publisher = {Springer Berlin / Heidelberg},

isbn = {},

pages = {135-155},

volume = {5950},

url = {http://dx.doi.org/10.1007/978-3-642-12310-8_8},

note = {10.1007/978-3-642-12310-8_8},

year = {2010}

}

A survey of multimodal information retrieval. It contains nice formulations of the basic MIR problems and the individual stages of the process, from data acquisition to presentation. There is also a short section on query languages.

Rui, Huang, Chang: Image Retrieval: Current Techniques, Promising Directions, and Open Issues. Journal of Visual Communication and Image Representation, Volume 10, Issue 1, 1999.

full text of the article,

bibtex

@ARTICLE{Rui99imageretrieval:,

author = {Yong Rui and Thomas S. Huang and Shih-fu Chang},

title = {Image Retrieval: Current Techniques, Promising Directions And Open Issues},

journal = {Journal of Visual Communication and Image Representation},

year = {1999},

volume = {10},

pages = {39--62}

}

An introduction to Content-Based Image Retrieval: history and main problems. The main problems are discussed in detail:

- visual features and their representation (nice descriptions of various approaches to describing color, ..., and to computing similarity)

- indexing: the basic approaches given are dimension reduction followed by various tree indexes (R-tree, k-d tree). Clustering and neural nets are mentioned as new approaches.

- state-of-the-art commercial and academic systems: most support some of: random browsing, search by example, search by sketch, search by text (including key word or speech), navigation with customized image categories. Several systems are described (QBIC, MARS, WebSeek, ...).

They then propose directions for future research: human in the loop, relevance feedback, web-oriented systems, high-dimensional indexing, performance evaluation criteria and a testbed.

Veltkamp, Tanase: Content-Based Image Retrieval Systems: A Survey. 2000.

full text of the article,

bibtex

@techreport{citeulike:3430154,

abstract = {In many areas of commerce, government, academia, and hospitals, large collections of digital images are being created. Many of these collections are the product of digitizing existing collections of},

author = {Veltkamp, Remco C. and Tanase, Mirela},

citeulike-article-id = {3430154},

citeulike-linkout-0 = {http://citeseerx.ist.psu.edu/viewdoc/summary?doi=10.1.1.31.7344},

institution = {Department of Computing Science, Utrecht University},

keywords = {retrieval, survey},

posted-at = {2008-10-20 09:24:50},

priority = {2},

title = {Content-Based Image Retrieval Systems: {A} Survey},

url = {http://citeseerx.ist.psu.edu/viewdoc/summary?doi=10.1.1.31.7344},

year = {2002}

}

Descriptions of several dozen CBIR systems: how objects are described, how a query is specified and evaluated, and how results are presented. For some systems, relevance feedback is mentioned and briefly described.

Smeulders, Worring, Santini, Gupta, Jain: Content-Based Image Retrieval at the End of the Early Years. 2000.

full text of the article,

bibtex

@ARTICLE{895972,

author={Smeulders, A.W.M. and Worring, M. and Santini, S. and Gupta, A. and Jain, R.},

journal={Pattern Analysis and Machine Intelligence, IEEE Transactions on}, title={Content-based image retrieval at the end of the early years},

year={2000},

month={dec},

volume={22},

number={12},

pages={1349 -1380},

keywords={accumulative features;content-based image retrieval;databases;global features;local geometry;pictures;salient points;semantic gap;semantics;sensory gap;similarity;system architecture;system engineering;use patterns;computer vision;content-based retrieval;image colour analysis;image retrieval;image segmentation;image texture;reviews;visual databases;},

doi={10.1109/34.895972},

ISSN={0162-8828},

}

Ashley, Flickner, Hafner, Lee, Niblack, Petkovic: The Query By Image Content (QBIC) System. SIGMOD 1995.

full text of the article,

bibtex

@inproceedings{Ashley:1995:QIC:223784.223888,

author = {Ashley, Jonathan and Flickner, Myron and Hafner, James and Lee, Denis and Niblack, Wayne and Petkovic, Dragutin},

title = {The query by image content (QBIC) system},

booktitle = {Proceedings of the 1995 ACM SIGMOD international conference on Management of data},

series = {SIGMOD '95},

year = {1995},

isbn = {0-89791-731-6},

location = {San Jose, California, United States},

pages = {475--},

url = {http://doi.acm.org/10.1145/223784.223888},

doi = {http://doi.acm.org/10.1145/223784.223888},

acmid = {223888},

publisher = {ACM},

address = {New York, NY, USA},

}

Wang, Li, Wiederhold: SIMPLIcity: Semantics-Sensitive Integrated Matching for Picture LIbraries. IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 23, no. 9, pp. 947-963, 2001.

full text of the article,

bibtex

@ARTICLE{Wang01simplicity:semantics-sensitive,

author = {James Z. Wang and Jia Li and Gio Wiederhold},

title = {SIMPLIcity: Semantics-Sensitive Integrated Matching for Picture LIbraries},

journal = {IEEE Transactions on Pattern Analysis and Machine Intelligence},

year = {2001},

volume = {23},

pages = {947--963}

}

The database is sorted into several categories: textured/nontextured, graph/photograph. They use several descriptors of their own, which are region-based but then combined into a single global one. Tested on 200K images.

Wei, Li, Sethi: Web-WISE: compressed image retrieval over the web . Multimedia Information Analysis and Retrieval, 1998.

full text of the article,

bibtex

@incollection{springerlink:10.1007/BFb0016487,

author = {Wei, Gang and Li, Dongge and Sethi, I.},

affiliation = {Wayne State University Vision and Neural Network Lab. Dept. of Computer Science 48202 Detroit MI},

title = {Web-WISE: compressed image retrieval over the web},

booktitle = {Multimedia Information Analysis and Retrieval},

series = {Lecture Notes in Computer Science},

editor = {Ip, Horace and Smeulders, Arnold},

publisher = {Springer Berlin / Heidelberg},

isbn = {},

pages = {33-46},

volume = {1464},

url = {http://dx.doi.org/10.1007/BFb0016487},

note = {10.1007/BFb0016487},

year = {1998}

}

The system has three parts: downloading images from the web, descriptor extraction, and query processing. It uses its own global color and texture descriptors.

Quack, Monich, Thiele, Manjunath: Cortina: A System for Large-scale, Content-based Web Image Retrieval. ACM Multimedia 2004.

full text of the article,

bibtex

@inproceedings{Quack:2004:CSL:1027527.1027650,

author = {Quack, Till and M\"{o}nich, Ullrich and Thiele, Lars and Manjunath, B. S.},

title = {Cortina: a system for large-scale, content-based web image retrieval},

booktitle = {Proceedings of the 12th annual ACM international conference on Multimedia},

series = {MULTIMEDIA '04},

year = {2004},

isbn = {1-58113-893-8},

location = {New York, NY, USA},

pages = {508--511},

numpages = {4},

url = {http://doi.acm.org/10.1145/1027527.1027650},

doi = {http://doi.acm.org/10.1145/1027527.1027650},

acmid = {1027650},

publisher = {ACM},

address = {New York, NY, USA},

keywords = {MPEG-7, WWW, association rules, clustering, large-scale, online, relevance feedback, semantics, web image retrieval},

}

3 million images. Visual features and collateral text. The search process consists of an initial query-by-keyword or query-by-image, followed by relevance feedback on the visual appearance of the results.

Semantic relationships in the data are explored and exploited by data mining, and multiple feature

spaces are included in the search process. Uses four global MPEG7 feature descriptors for color and texture.

Batko, Falchi, Lucchese, Novak, Perego, Rabitti, Sedmidubsky, Zezula: Building a web-scale image similarity search system. Multimedia Tools and Applications 2010.

full text of the article,

bibtex

@article {springerlink:10.1007/s11042-009-0339-z,

author = {Batko, Michal and Falchi, Fabrizio and Lucchese, Claudio and Novak, David and Perego, Raffaele and Rabitti, Fausto and Sedmidubsky, Jan and Zezula, Pavel},

affiliation = {Masaryk University Brno Czech Republic},

title = {Building a web-scale image similarity search system},

journal = {Multimedia Tools and Applications},

publisher = {Springer Netherlands},

issn = {1380-7501},

keyword = {Computer Science},

pages = {599-629},

volume = {47},

issue = {3},

url = {http://dx.doi.org/10.1007/s11042-009-0339-z},

note = {10.1007/s11042-009-0339-z},

year = {2010}

}

A large, substantial article about MUFIN. It opens with a good calculation of the data explosion. The article has two main topics: building the CoPhIR collection and creating a scalable search system. It compares centralized and distributed implementations and the use of various indexes.

Zagoris, Arampatzis, Chatzichristofis: www.MMRetrieval.net: A Multimodal Search Engine. SISAP 2010.

full text of the article,

bibtex

@inproceedings{Zagoris:2010:WMS:1862344.1862363,

author = {Zagoris, Konstantinos and Arampatzis, Avi and Chatzichristofis, Savvas A.},

title = {www.MMRetrieval.net: a multimodal search engine},

booktitle = {Proceedings of the Third International Conference on SImilarity Search and APplications},

series = {SISAP '10},

year = {2010},

isbn = {978-1-4503-0420-7},

location = {Istanbul, Turkey},

pages = {117--118},

numpages = {2},

url = {http://doi.acm.org/10.1145/1862344.1862363},

doi = {http://doi.acm.org/10.1145/1862344.1862363},

acmid = {1862363},

publisher = {ACM},

address = {New York, NY, USA},

}

A two-page paper about the system. It uses distributed indexes and parallel search (details unknown) and combines modalities using late fusion; the user chooses the combination method and the weights.

Kludas, Bruno, Marchand-Maillet: Information Fusion in Multimedia Information Retrieval. 2008

full text of the article,

bibtex

@incollection {springerlink:10.1007/978-3-540-79860-6_12,

author = {Kludas, Jana and Bruno, Eric and Marchand-Maillet, Stéphane},

affiliation = {University of Geneva Switzerland},

title = {Information Fusion in Multimedia Information Retrieval},

booktitle = {Adaptive Multimedia Retrieval: Retrieval, User, and Semantics},

series = {Lecture Notes in Computer Science},

editor = {Boujemaa, Nozha and Detyniecki, Marcin and Nürnberger, Andreas},

publisher = {Springer Berlin / Heidelberg},

isbn = {},

pages = {147-159},

volume = {4918},

url = {http://dx.doi.org/10.1007/978-3-540-79860-6_12},

note = {10.1007/978-3-540-79860-6_12},

year = {2008}

}

A nice overview of approaches to information fusion, with categorization and references. The practical part compares several approaches using SVM classifiers on small test datasets. Categorization by the number of modalities and sources. Multi-modal fusion can be serial, parallel or hierarchical. Another division: complementary, cooperative and competitive fusion (depending on whether the information sources complement each other or refine the selection). Fusion can take place at different levels: data/feature, classifier/score, decision level. Performance improvement boundaries.

- The JDL working group defined information fusion as "an information process

that associates, correlates and combines data and information from single or

multiple sensors or sources to achieve refined estimates of parameters, characteristics, events and behaviors"

- Drawbacks in data and feature fusion are problems due to

the ’curse of dimensionality’, its computationally expensiveness and that it needs

a lot of training data

- It can be said to be throughout

faster because each modality is processed independently which is leading to a

dimensionality reduction. Decision fusion is however seen as a very rigid solution,

because at this level of processing only limited information is left.

- For information retrieval systems the situation is not as trivial. For

example, three different effects in rank aggregation tasks can be exploited with

fusion [4]:

(1) Skimming effect: the lists include diverse and relevant items

(2) Chorus effect: the lists contain similar and relevant items

(3) Dark Horse effect: unusually accurate result of one source

According to the theory presented in the last section, it is impossible to exploit

all effects within one approach because the required complementary (1) and

cooperative (2) strategy are contradictory.

Zhou, Depeursinge, Muller: Information Fusion for Combining Visual and Textual Image Retrieval. Pattern Recognition 2010

full text of the article,

bibtex

@INPROCEEDINGS{5595739,

author={Xin Zhou and Depeursinge, A. and Muller, H.},

booktitle={Pattern Recognition (ICPR), 2010 20th International Conference on}, title={Information Fusion for Combining Visual and Textual Image Retrieval},

year={2010},

month={aug.},

volume={},

number={},

pages={1590 -1593},

keywords={ImageCLEF medical image retrieval;dark horse effect;information fusion algorithm;logarithmic rank penalization;maximum combination;multi-modality fusion;single modality fusion;stable normalization;textual image retrieval;visual image retrieval;image fusion;image retrieval;},

doi={10.1109/ICPR.2010.393},

ISSN={1051-4651},

}

They try various methods of combining results with a late fusion strategy and compare their influence on the fusion effects (chorus, dark horse). Combination rules: maximum combination, sum combination, product of the maximum and a non-zero number. Various normalization strategies are tried.
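
A rough sketch of score-based late fusion with the combination rules named above. Min-max normalization is used here as one possible normalization; the paper tries several. The `max_nz` rule (maximum multiplied by the number of lists in which the document has a non-zero score) is my reading of "product of maximum and a non-zero number" and should be treated as an assumption, as should all function names.

```python
def min_max(scores):
    """Rescale one result list (doc -> score) to the range [0, 1]."""
    if not scores:
        return {}
    low, high = min(scores.values()), max(scores.values())
    if high == low:
        return {doc: 1.0 for doc in scores}
    return {doc: (s - low) / (high - low) for doc, s in scores.items()}


def fuse(runs, mode="sum"):
    """Combine several result lists; returns (doc, fused score), best first."""
    normalized = [min_max(run) for run in runs]
    docs = set().union(*normalized)
    fused = {}
    for doc in docs:
        values = [run.get(doc, 0.0) for run in normalized]
        nonzero = sum(1 for v in values if v > 0)
        if mode == "sum":
            fused[doc] = sum(values)
        elif mode == "max":
            fused[doc] = max(values)
        elif mode == "max_nz":  # assumed reading, see the note above
            fused[doc] = max(values) * nonzero
        else:
            raise ValueError(f"unknown mode: {mode}")
    # Ties are broken by document id to keep the output deterministic.
    return sorted(fused.items(), key=lambda item: (-item[1], item[0]))
```

The sum rule rewards documents found by several sources (chorus effect), while the max rule lets a single confident source dominate (dark horse effect).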

Moulin, Largeron, Gery: Impact of Visual Information on Text and Content Based Image Retrieval. 2010

full text of the article,

bibtex

@incollection {springerlink:10.1007/978-3-642-14980-1_15,

author = {Moulin, Christophe and Largeron, Christine and Géry, Mathias},

affiliation = {Université de Lyon, F-42023 Saint-Étienne, France},

title = {Impact of Visual Information on Text and Content Based Image Retrieval},

booktitle = {Structural, Syntactic, and Statistical Pattern Recognition},

series = {Lecture Notes in Computer Science},

editor = {Hancock, Edwin and Wilson, Richard and Windeatt, Terry and Ulusoy, Ilkay and Escolano, Francisco},

publisher = {Springer Berlin / Heidelberg},

isbn = {},

pages = {159-169},

volume = {6218},

url = {http://dx.doi.org/10.1007/978-3-642-14980-1_15},

note = {10.1007/978-3-642-14980-1_15},

year = {2010}

}

Late fusion of visual and text results by weighted sum; they examine the influence of the weights. Part of ImageCLEF multimedia. SIFT, bag of words. Text and visual descriptors are extracted from both the query object and the database objects, and both are weighted by tf-idf.
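
The same tf-idf weighting can be applied to textual words and to visual words (quantized SIFT descriptors), since both are just bags of tokens. The sketch below uses one common tf-idf variant with cosine similarity, followed by a weighted sum; the exact weighting scheme in the paper may differ, and the function names and the `alpha` parameter are assumptions.

```python
import math
from collections import Counter


def tfidf_vectors(docs):
    """docs: list of token lists (text words or visual-word ids)."""
    n_docs = len(docs)
    doc_freq = Counter(term for doc in docs for term in set(doc))
    vectors = []
    for doc in docs:
        term_freq = Counter(doc)
        vectors.append({
            term: (count / len(doc)) * math.log(n_docs / doc_freq[term])
            for term, count in term_freq.items()
        })
    return vectors


def cosine(u, v):
    """Cosine similarity of two sparse vectors stored as dicts."""
    dot = sum(weight * v.get(term, 0.0) for term, weight in u.items())
    norm_u = math.sqrt(sum(w * w for w in u.values()))
    norm_v = math.sqrt(sum(w * w for w in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def fused_score(text_sim, visual_sim, alpha=0.5):
    """Weighted-sum late fusion; alpha is the weight of the text modality."""
    return alpha * text_sim + (1 - alpha) * visual_sim
```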

Clinchant, Ah-Pine, Csurka: Semantic Combination of Textual and Visual Information in Multimedia Retrieval. ICMR 2011

full text of the article,

bibtex

@inproceedings{Clinchant:2011:SCT:1991996.1992040,

author = {Clinchant, St\'{e}phane and Ah-Pine, Julien and Csurka, Gabriela},

title = {Semantic combination of textual and visual information in multimedia retrieval},

booktitle = {Proceedings of the 1st ACM International Conference on Multimedia Retrieval},

series = {ICMR '11},

year = {2011},

isbn = {978-1-4503-0336-1},

location = {Trento, Italy},

pages = {44:1--44:8},

articleno = {44},

numpages = {8},

url = {http://doi.acm.org/10.1145/1991996.1992040},

doi = {http://doi.acm.org/10.1145/1991996.1992040},

acmid = {1992040},

publisher = {ACM},

address = {New York, NY, USA},

keywords = {information fusion, multimedia retrieval},

}

"Image and textual queries are expressed at different semantic levels" - one should not combine them independently

as most information fusion techniques do. It is known that text search alone works better than visual search alone, and also that combining them helps. So how should they be combined as well as possible, i.e. how to manage the complementarities between image and text search? They explain the difference between early and late fusion nicely. They also introduce transmedia fusion: first use one of the modalities to gather relevant documents and then switch to the other modality. In many experiments reported in the literature, it has been shown that either late fusion or transmedia fusion approaches have been performing better than early fusion techniques. On the other hand, according to some ImageCLEF paper, image reranking is worse than pure text search. Could that be because the text distance is ignored in the ranking? They therefore propose combining image reranking with late fusion: first select candidates by text search, then rank them by text+visual. For the preselection they use K=1000.

- There is a semantic mismatch between visual and textual

queries: image queries are ambiguous for humans from a

semantic viewpoint. Fusion techniques should be asymmetric since text and image are expressed at different semantic

levels.

- Visual techniques do work and are effective to retrieve visually similar images but they

usually fail in multimedia retrieval due to the semantic mismatch
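
The proposed combination of image reranking with late fusion, as summarized in the notes above, can be sketched as a two-stage procedure: text search preselects K candidates, and only those are ranked by a fused text+visual score. The weighted sum and the assumption that both score sets are already normalized to [0, 1] are simplifications made for this note; the paper's actual scoring functions may differ.

```python
def two_stage_search(text_scores, visual_scores, k=1000, alpha=0.5):
    """text_scores, visual_scores: doc -> similarity in [0, 1] (assumed).

    Stage 1 keeps the k best documents by text score only.
    Stage 2 orders those candidates by a weighted sum of both scores,
    so the text score still contributes to the final ranking.
    """
    candidates = sorted(text_scores, key=lambda d: (-text_scores[d], d))[:k]
    fused = {
        doc: alpha * text_scores[doc] + (1 - alpha) * visual_scores.get(doc, 0.0)
        for doc in candidates
    }
    return sorted(fused, key=lambda d: (-fused[d], d))
```

The asymmetry is deliberate: a document that is visually similar but was not retrieved by the text query never enters the result.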

Park, Nang: Content Based Web Image Retrieval System Using Both MPEG-7 Visual Descriptors and Textual Information. MMM 2007

plný text článku,

bibtex

@incollection {springerlink:10.1007/978-3-540-69423-6_64,

author = {Park, Joohyoun and Nang, Jongho},

affiliation = {Dept. of Computer Science and Engineering, Sogang University, 1, ShinsuDong, MapoGu, Seoul, 121-742 Korea},

title = {Content Based Web Image Retrieval System Using Both MPEG-7 Visual Descriptors and Textual Information},

booktitle = {Advances in Multimedia Modeling},

series = {Lecture Notes in Computer Science},

editor = {Cham, Tat-Jen and Cai, Jianfei and Dorai, Chitra and Rajan, Deepu and Chua, Tat-Seng and Chia, Liang-Tien},

publisher = {Springer Berlin / Heidelberg},

isbn = {},

pages = {659-669},

volume = {4351},

url = {http://dx.doi.org/10.1007/978-3-540-69423-6_64},

note = {10.1007/978-3-540-69423-6_64},

year = {2006}

}

A complete web search system: image downloading, indexing, search. It searches by text and visual features with early fusion. Text descriptors are obtained from the page and then filtered using learned associations between visual concepts and keywords (linked via WordNet). The text & visual index is a hierarchical bitmap index.

He, Xiong, Yang, Park: Using Multi-Modal Semantic Association Rules to fuse keywords and visual features automatically for Web image retrieval. Information Fusion 2011

full text of the article,

bibtex

@article{He2011223,

title = "Using Multi-Modal Semantic Association Rules to fuse keywords and visual features automatically for Web image retrieval",

journal = "Information Fusion",

volume = "12",

number = "3",

pages = "223 - 230",

year = "2011",

note = "Special Issue on Information Fusion in Future Generation Communication Environments",

issn = "1566-2535",

doi = "DOI: 10.1016/j.inffus.2010.02.001",

url = "http://www.sciencedirect.com/science/article/pii/S1566253510000230",

author = "Ruhan He and Naixue Xiong and Laurence T. Yang and Jong Hyuk Park",

keywords = "Web image retrieval",

keywords = "Multi-Modal Semantic Association Rule (MMSAR)",

keywords = "Association rule mining",

keywords = "Inverted file",

keywords = "Relevance Feedback (RF)"

}

They are developing the VAST web image retrieval system. They look for associations between visual features and text. They divide information fusion into automatic and non-automatic, with these categories under automatic: pseudo-relevance feedback, online clustering, long-term RF learning. They cite some analyses of user behavior (the lazy user). Their technology automatically fuses image and text using a Multi-Modal Semantic Association Rule, which links a single keyword with several visual features. The creation of associations is described in considerable detail. The learned associations are used to rerank the results of the initial text-based search.

Depeursinge, Müller: Fusion Techniques for Combining Textual and Visual Information Retrieval. Chapter 6 of ImageCLEF Experimental Evaluation in Visual Information Retrieval, Springer 2010

full text of the article,

bibtex

@incollection {springerlink:10.1007/978-3-642-15181-1_6,

author = {Depeursinge, Adrien and Müller, Henning},

affiliation = {University and University Hospitals of Geneva (HUG), Rue Gabrielle–Perret–Gentil 4, 1211 Geneva 14, Switzerland},

title = {Fusion Techniques for Combining Textual and Visual Information Retrieval},

booktitle = {ImageCLEF},

series = {The Kluwer International Series on Information Retrieval},

editor = {Croft, W. Bruce and Müller, Henning and Clough, Paul and Deselaers, Thomas and Caputo, Barbara},

publisher = {Springer Berlin Heidelberg},

isbn = {978-3-642-15181-1},

keyword = {Computer Science},

pages = {95-114},

volume = {32},

url = {http://dx.doi.org/10.1007/978-3-642-15181-1_6},

note = {10.1007/978-3-642-15181-1_6},

year = {2010}

}

A categorization of approaches to data fusion from ImageCLEF papers over the last 7 years. They identify three basic approaches: inter-media query expansion, early fusion, and late fusion (by far the most widely used). For early fusion they consider simple concatenation and cite the curse of dimensionality as a problem. Within late fusion they distinguish rank-based and score-based fusion; the latter is more common and requires normalization. One possible implementation of late fusion is intersection, with several variants of how to define it. Another variant is reordering; there are references to publications on text search + visual reordering and vice versa. Several combination methods (SUM and the like) are described in detail. They also describe text and visual query expansion.
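
Rank-based fusion needs no score normalization because it uses only positions in the result lists. As one simple illustration, the sketch below uses a Borda count; this particular rule is my own choice of example and is not claimed to be one of the methods covered in the chapter.

```python
def borda_fuse(rankings):
    """rankings: list of result lists, each ordered best first.

    A document at 0-based position p in a list earns (n - p) points, where n
    is the number of distinct documents overall; documents missing from a
    list earn nothing from it.
    """
    pool = set().union(*rankings)
    n = len(pool)
    points = {doc: 0 for doc in pool}
    for ranking in rankings:
        for position, doc in enumerate(ranking):
            points[doc] += n - position
    # Ties are broken by document id to keep the output deterministic.
    return sorted(points, key=lambda d: (-points[d], d))
```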

Arampatzis, Zagoris, Chatzichristofis: Fusion vs. Two-Stage for Multimodal Retrieval. ECIR 2011

full text of the article,

bibtex

@incollection {springerlink:10.1007/978-3-642-20161-5_88,

author = {Arampatzis, Avi and Zagoris, Konstantinos and Chatzichristofis, Savvas},

affiliation = {Department of Electrical and Computer Engineering, Democritus University of Thrace, Xanthi, 67100 Greece},

title = {Fusion vs. Two-Stage for Multimodal Retrieval},

booktitle = {Advances in Information Retrieval},

series = {Lecture Notes in Computer Science},

editor = {Clough, Paul and Foley, Colum and Gurrin, Cathal and Jones, Gareth and Kraaij, Wessel and Lee, Hyowon and Murdock, Vanessa},

publisher = {Springer Berlin / Heidelberg},

isbn = {},

pages = {759-762},

volume = {6611},

url = {http://dx.doi.org/10.1007/978-3-642-20161-5_88},

note = {10.1007/978-3-642-20161-5_88},

year = {2011}

}

They compare late fusion of text and visual search results with visual reordering in terms of quality; the two come out about the same. They did not measure time, but state in the discussion that reordering is faster and therefore better. The comparison was run on roughly 200,000 objects from Wikipedia.

Kokar, Tomasik, Weyman: Formalizing classes of information fusion systems. Information Fusion 2004

full text of the article,

bibtex

@article{DBLP:journals/inffus/KokarTW04,

author = {Mieczyslaw M. Kokar and

Jerzy A. Tomasik and

Jerzy Weyman},

title = {Formalizing classes of information fusion systems},

journal = {Information Fusion},

volume = {5},

number = {3},

year = {2004},

pages = {189-202},

ee = {http://dx.doi.org/10.1016/j.inffus.2003.11.001},

bibsource = {DBLP, http://dblp.uni-trier.de}

}

An attempt at a formal treatment of information fusion. They deal with early and late fusion and show that decision fusion is a subclass of data fusion. The formalism is interesting and they use nice diagrams; it would probably be worth a closer look at some point.

Fagin: Combining Fuzzy Information: an Overview. SIGMOD 2002

full text of the article,

bibtex

@article{Fagin:2002:CFI:565117.565143,

author = {Fagin, Ronald},

title = {Combining fuzzy information: an overview},

journal = {SIGMOD Rec.},

volume = {31},

issue = {2},

month = {June},

year = {2002},

issn = {0163-5808},

pages = {109--118},

numpages = {10},

url = {http://doi.acm.org/10.1145/565117.565143},

doi = {http://doi.acm.org/10.1145/565117.565143},

acmid = {565143},

publisher = {ACM},

address = {New York, NY, USA},

}

The naive algorithm, Fagin's algorithm and the Threshold algorithm for evaluating combined queries over a system that can return sorted lists according to individual descriptors (two kinds of data access: sorted and random access, with random access being more expensive). The Threshold algorithm is proven to be the optimal exact algorithm for this problem. An approximate algorithm is also discussed, which terminates early once a given level of accuracy is reached.

- There is an obvious naive algorithm for obtaining the top k answers. Under sorted access, it looks at

every entry in each of the m sorted lists, computes (using t) the overall grade of every object, and returns

the top k answers. The naive algorithm has linear middleware cost (linear in the database size), and

thus is not efficient for a large database.

- Fagin algorithm:

- Do sorted access in parallel to each of the m sorted lists Li. (By “in parallel”, we mean that we

access the top member of each of the lists under sorted access, then we access the second member

of each of the lists, and so on.) Wait until there are at least k “matches”, that is, wait until there

is a set H of at least k objects such that each of these objects has been seen in each of the m lists.

- For each object R that has been seen, do random access as needed to each of the lists Li to find

the ith field xi of R.

- Compute the grade t(R) = t(x1, . . . , xm) for each object R that has been seen. Let Y be a set

containing the k objects that have been seen with the highest grades (ties are broken arbitrarily).

The output is then the graded set {(R, t(R)) |R ∈ Y }.

- Threshold algorithm:

- Do sorted access in parallel to each of the m sorted lists Li. As an object R is seen under sorted

access in some list, do random access to the other lists to find the grade xi of object R in every

list Li. Then compute the grade t(R) = t(x1, . . . , xm) of object R. If this grade is one of the k

highest we have seen, then remember object R and its grade t(R) (ties are broken arbitrarily, so

that only k objects and their grades need to be remembered at any time).

- For each list Li, let xi be the grade of the last object seen under sorted access. Define the threshold

value τ to be t(x1, . . . , xm). As soon as at least k objects have been seen whose grade is at least

equal to τ, then halt.

- Let Y be a set containing the k objects that have been seen with the highest grades. The output

is then the graded set {(R, t(R)) |R ∈ Y }.

- Aggregation function: FA and TA are correct for monotone aggregation functions t

(that is, the algorithm successfully finds the top k answers). An aggregation function t is strict

if t(x1, . . . , xm) = 1 holds precisely when xi = 1 for

every i. Thus, an aggregation function is strict if it takes on the maximal value of 1 precisely when each

argument takes on this maximal value. We would certainly expect an aggregation function representing

the conjunction to be strict. In fact, it is reasonable to think of strictness

as being a key characterizing feature of the conjunction.
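
The Threshold algorithm quoted above can be sketched in Python as follows. This is a minimal illustration, not code from the paper: the name threshold_algorithm, the dictionary-based lists and the example grades are assumptions made here, and every object is assumed to have a grade in every list. Sorted access is simulated by pre-sorting each dictionary and random access by a dictionary lookup.

```python
import heapq
from typing import Callable, Dict, List, Sequence, Tuple

Grades = Dict[str, float]  # hypothetical representation: object id -> grade in one list


def threshold_algorithm(
    lists: Sequence[Grades],
    t: Callable[[Sequence[float]], float],
    k: int,
) -> List[Tuple[str, float]]:
    """Return the top-k (object, overall grade) pairs for a monotone t."""
    # Simulated sorted access: every list ordered by descending grade.
    sorted_lists = [
        sorted(grades.items(), key=lambda item: item[1], reverse=True)
        for grades in lists
    ]
    best: List[Tuple[float, str]] = []  # min-heap holding the k best objects seen
    seen = set()
    for row in zip(*sorted_lists):  # one round of parallel sorted access
        for obj, _ in row:
            if obj in seen:
                continue
            seen.add(obj)
            # Simulated random access to every list for the new object.
            grade = t([grades[obj] for grades in lists])
            if len(best) < k:
                heapq.heappush(best, (grade, obj))
            elif grade > best[0][0]:
                heapq.heapreplace(best, (grade, obj))
        # Threshold: t applied to the last grades seen under sorted access.
        tau = t([grade for _, grade in row])
        if len(best) >= k and best[0][0] >= tau:
            break  # k objects with grade >= tau have been seen
    return sorted(((obj, grade) for grade, obj in best), key=lambda p: -p[1])


colour = {"a": 0.9, "b": 0.8, "c": 0.3, "d": 0.1}
shape = {"a": 0.7, "b": 0.85, "c": 0.9, "d": 0.2}
print(threshold_algorithm([colour, shape], min, k=2))
```

With min as the aggregation function, the sketch stops on this toy input before object d is ever reached under sorted access, which is the saving over the naive algorithm.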

Fagin: Combining Fuzzy Information from Multiple Systems. 1999

(full text of the article)

How to combine multiple descriptors. The paper defines the properties required of the aggregation function

and proposes the Fagin algorithm.

- Let us define an m-ary scoring function to be a function from [0, 1]^m to [0, 1]. For the sake of

generality, we will consider m-ary scoring functions for evaluating conjunctions of m atomic queries, although

in practice an m-ary conjunction is almost always evaluated by using an associative 2-ary function that

is iterated. Analogously to the binary case, we say that an m-ary scoring function t is monotone if

t(x1, ..., xm) ≤ t(x1', ..., xm') when xi ≤ xi' for every i. As discussed before, monotonicity is a reasonable

property to expect a scoring function to obey. Another such property is strictness: an m-ary scoring function t

is strict if t(x1, ..., xm) = 1 iff xi = 1 for every i. Thus, a scoring function is strict if it takes on the

maximal value of 1 precisely if each argument takes on this maximal value. Scoring functions considered

in the literature seem to be monotone and strict. In particular, scoring functions derived from “triangular

norms” are monotone and strict. Another important class of scoring functions include various

weighted and unweighted arithmetic and geometric means, which Thole, Zimmerman, and Zysno found to

perform empirically quite well. These are also monotone and strict.
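
The two properties can be checked numerically on a small grid. The sketch below is only an illustration under assumptions made here: the function names and the grid are hypothetical, and a grid check is evidence for the property, not a proof. Max is included purely as a contrast case that is monotone but not strict.

```python
import itertools
import math
from typing import Callable, Sequence

Scoring = Callable[[Sequence[float]], float]

# Grid of exactly representable grades in [0, 1] (an arbitrary choice).
GRID = [0.0, 0.25, 0.5, 0.75, 1.0]


def t_min(xs: Sequence[float]) -> float:
    return min(xs)


def t_product(xs: Sequence[float]) -> float:
    return math.prod(xs)


def t_mean(xs: Sequence[float]) -> float:
    return sum(xs) / len(xs)


def t_max(xs: Sequence[float]) -> float:
    return max(xs)


def is_monotone_on_grid(t: Scoring, m: int = 2) -> bool:
    """t(x) <= t(y) whenever x_i <= y_i for every i, checked on GRID^m."""
    points = list(itertools.product(GRID, repeat=m))
    return all(
        t(x) <= t(y)
        for x in points
        for y in points
        if all(a <= b for a, b in zip(x, y))
    )


def is_strict_on_grid(t: Scoring, m: int = 2) -> bool:
    """t(x) = 1 exactly when every x_i = 1, checked on GRID^m."""
    return all(
        (t(x) == 1.0) == all(a == 1.0 for a in x)
        for x in itertools.product(GRID, repeat=m)
    )


for name, t in [("min", t_min), ("product", t_product),
                ("mean", t_mean), ("max", t_max)]:
    print(name, is_monotone_on_grid(t), is_strict_on_grid(t))
```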

Tung, Zhang, Koudas, Ooi: Similarity Search: A Matching Based Approach. VLDB 06.

(full text of the article)

Combined queries: whereas object similarity is usually determined from a combination of a fixed set of descriptors, the paper proposes using a variable subset of descriptors, namely those that are most similar (the reason: especially in higher dimensions there is a good chance that some descriptor will be far off even though the object is otherwise a very good match).

-

NN problem: distance between query object Q and data object over a FIXED set of features. Problems of this

approach - partial similarities undiscovered, distance affected by a few dimensions with high dissimilarity

(this phenomenon more obvious for high-dimensional data; in real applications - bad pixels, noise in signal, ...)

-

Model: object = multidimensional point; attributes sorted in each dimension,

cost is measured by the number of attributes retrieved; attributes sorted

with respect to their values, not distances to Q!

-

k-n-match problem: matching between Q and data objects in n dimensions, n < dimensionality d; these n dimensions

are determined dynamically to make Q and data objects returned match best

Definition: N-match difference: Given two d-dimensional points P (p1, p2, ..., pd) and

Q (q1, q2, ..., qd), let δi = |pi - qi|, i = 1, ..., d. Sort the array

δ1, ..., δd in increasing order and let the sorted array be

δ1' ,..., δd'. Then δn' is the n-match difference of point P

with regard to Q.

N-match difference is not a metric!

Definition: The n-match problem: Given a d-dimensional database DB, a query point Q and

an integer n (1 ≤ n ≤ d), find the point P ∈ DB that has

the smallest n-match difference with regard to Q. P is called

the n-match point of Q.

Definition: The k-n-match problem: Given a d-dimensional database DB of cardinality c, a query

point Q, an integer n (1 ≤ n ≤ d), and an integer k ≤ c,

find a set S which consists of k points from DB so that for

any point P1 ∈ S and any point P2 ∈ DB - S, the n-match

difference between P1 and Q is less than or equal to the n-

match difference between P2 and Q. The S is the k-n-match

set of Q.

-

frequent k-n-match: finds a set of objects that appears in the k-n-match answers

most frequently for a range of values of n

The frequent k-n-match problem:

Given a d-dimensional database DB of cardinality c, a query

point Q, an integer k ≤ c, and an integer range [n0; n1]

within [1; d], let S0, ..., Si be the answer sets of k-n0-match,

..., k-n1-match, respectively. Find a set T of k points, so

that for any point P1 ∈ T and any point P2 ∈ DB - T, P1’s

number of appearances in S0, ..., Si is larger than or equal

to P2’s number of appearances in S0, ..., Si.

- Algorithm for (frequent) k-n-match problem: FA doesn't work

as the aggregation function used in k-n-match is not monotone.

AD algorithm for k-n-match Search: We first locate each dimension of the query Q in

the d sorted lists. Then we retrieve the individual attributes

in ascending order of their differences to the corresponding

attributes of Q. When a point ID is first seen n times, this

point is the first n-match. We keep retrieving the attributes

until k point ID’s have been seen at least n times. Then we

can stop. We call this strategy of accessing the attributes

in Ascending order of their Differences to the query point’s

attributes as the AD algorithm.

- Effectiveness evaluation:

- To validate the statement that the traditional kNN query

leaves many partial similarities uncovered, we first use an

image database to visually show this fact.

- In order to evaluate effectiveness from a (statistically) quantitative view, we use the class stripping technique, which is described as follows. We use five real data sets with dimensionalities varying from 4 to 34 ... Each record has an additional variable indicating which class it belongs to. By the class stripping technique, we strip this class tag from each point and use different techniques to find the similar objects to the query objects. If the answer and the query belong to the same class, then the answer is correct. The more correct ones in the returned answers, statistically, the better the quality of the similarity searching method.
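
The n-match difference and the AD strategy quoted above can be sketched in Python as follows. This is a minimal in-memory illustration, not the authors' implementation: the function names, the dictionary database and the sample points are assumptions made here. Each dimension keeps its attributes sorted by value, the query is located with a binary search, and two cursors per dimension move outwards while a heap picks the globally smallest difference.

```python
import bisect
import heapq
from typing import Dict, List, Sequence

Database = Dict[str, Sequence[float]]  # hypothetical: point id -> coordinates


def n_match_difference(p: Sequence[float], q: Sequence[float], n: int) -> float:
    """The n-th smallest per-dimension difference |p_i - q_i|."""
    return sorted(abs(a - b) for a, b in zip(p, q))[n - 1]


def k_n_match_brute_force(db: Database, q: Sequence[float], k: int, n: int) -> List[str]:
    """Reference answer: rank all points by their n-match difference."""
    return sorted(db, key=lambda pid: n_match_difference(db[pid], q, n))[:k]


def k_n_match_ad(db: Database, q: Sequence[float], k: int, n: int) -> List[str]:
    """AD sketch: read attributes in ascending order of difference to q."""
    d = len(q)
    # Attributes are sorted by value in each dimension, not by distance to q.
    columns = [sorted((p[i], pid) for pid, p in db.items()) for i in range(d)]
    heap: list = []

    def push(i: int, j: int, step: int) -> None:
        if 0 <= j < len(columns[i]):
            value, pid = columns[i][j]
            heapq.heappush(heap, (abs(value - q[i]), pid, i, j, step))

    for i in range(d):
        j = bisect.bisect_left(columns[i], (q[i],))  # locate q in dimension i
        push(i, j, +1)      # cursor moving towards larger values
        push(i, j - 1, -1)  # cursor moving towards smaller values

    counts: Dict[str, int] = {}
    result: List[str] = []
    while heap and len(result) < k:
        _, pid, i, j, step = heapq.heappop(heap)
        push(i, j + step, step)  # advance the cursor that was just consumed
        counts[pid] = counts.get(pid, 0) + 1
        if counts[pid] == n:  # point seen n times: it is the next n-match
            result.append(pid)
    return result


db = {"a": (1.0, 1.0, 9.0), "b": (2.0, 2.0, 2.0), "c": (5.0, 5.0, 5.0)}
q = (1.0, 1.0, 1.0)
print(k_n_match_ad(db, q, k=2, n=2))
```

In this toy database point a matches the query exactly in two of three dimensions, so it ranks first for n = 2 even though its third coordinate is far away, which is the partial similarity that a fixed-feature distance would hide.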

Ideas: this could be used for re-ranking the results of a Combined Query, but there are problems:

the aggregation function is ignored; for a sum, this can perhaps be solved by normalizing the individual summands?

Manning, Raghavan, Schutze: Introduction to Information Retrieval. Cambridge University Press. 2008.

HTML version,

bibtex

@book{IRbook08,

author = {Christopher D. Manning and

Prabhakar Raghavan and

Hinrich Sch{\"u}tze},

title = {Introduction to information retrieval},

publisher = {Cambridge University Press},

year = {2008},

isbn = {978-0-521-86571-5},

pages = {I-XXI, 1-482},

bibsource = {DBLP, http://dblp.uni-trier.de}

}

Contains a good chapter on evaluation methods: what is suitable for ranked results

and for incomplete ground truth.

Järvelin, Kekäläinen: Cumulated gain-based evaluation of IR techniques. ACM Trans. Inf. Syst., 2002

full text of the article,

bibtex

@article{DBLP:journals/tois/JarvelinK02,

author = {Kalervo J{\"a}rvelin and

Jaana Kek{\"a}l{\"a}inen},

title = {Cumulated gain-based evaluation of IR techniques},

journal = {ACM Trans. Inf. Syst.},

volume = {20},

number = {4},

year = {2002},

pages = {422-446},

ee = {http://doi.acm.org/10.1145/582415.582418},

bibsource = {DBLP, http://dblp.uni-trier.de}

}

Describes the Cumulated Gain, Discounted Cumulated Gain and Normalized DCG metrics. Suitable for ranked

results; they also cope with incomplete GT.
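
The three measures can be sketched in Python as follows. This is a minimal illustration with hypothetical function names and example gains. It assumes the formulation in which ranks below the logarithm base b are not discounted and later ranks are divided by log_b(rank); when no ideal gain vector is supplied, the ideal ranking is taken to be the given gains sorted in descending order, which is an assumption of this sketch.

```python
import math
from typing import List, Optional, Sequence


def cumulated_gain(gains: Sequence[float]) -> List[float]:
    """CG at every rank: running sum of the graded relevance values."""
    total, out = 0.0, []
    for gain in gains:
        total += gain
        out.append(total)
    return out


def dcg(gains: Sequence[float], b: float = 2) -> List[float]:
    """DCG at every rank; ranks below b are not discounted."""
    total, out = 0.0, []
    for rank, gain in enumerate(gains, start=1):
        total += gain if rank < b else gain / math.log(rank, b)
        out.append(total)
    return out


def ndcg(
    gains: Sequence[float],
    ideal_gains: Optional[Sequence[float]] = None,
    b: float = 2,
) -> List[float]:
    """DCG divided by the DCG of the ideal ranking, rank by rank."""
    source = ideal_gains if ideal_gains is not None else gains
    ideal = sorted(source, reverse=True)[: len(gains)]
    ideal += [0.0] * (len(gains) - len(ideal))  # pad a short ideal vector
    actual, best = dcg(gains, b), dcg(ideal, b)
    return [a / i if i > 0 else 0.0 for a, i in zip(actual, best)]


gains = [3, 2, 3, 0, 0, 1, 2, 2, 3, 0]  # graded relevance of a ranked result
print(cumulated_gain(gains))
print([round(v, 2) for v in dcg(gains)])
print([round(v, 2) for v in ndcg(gains)])
```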

Nowak, Lukashevich, Dunker, Rueger: Performance Measures for Multilabel Evaluation. MIR 2010

full text of the article

A description and comparison of various performance measures: Precision, Recall, Mean

Average Precision and others. Concept-based comparison, example-based, hierarchical, ontology-based.

They compare them on ImageCLEF data and give recommendations on what to use (now used for ImageCLEF): MAP,

F-measure.
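
For orientation, the textbook definitions of the recommended measures can be sketched in Python as follows. The function names and the sample data are hypothetical, and the sketch uses the generic ranked-retrieval definitions; the paper's concept-based and example-based variants for multilabel annotation may differ in detail.

```python
from typing import List, Sequence, Set, Tuple


def precision_recall(retrieved: Sequence[str], relevant: Set[str]) -> Tuple[float, float]:
    """Set-based precision and recall of a retrieved list."""
    hits = sum(1 for item in retrieved if item in relevant)
    precision = hits / len(retrieved) if retrieved else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    return precision, recall


def f_measure(precision: float, recall: float, beta: float = 1.0) -> float:
    """Weighted harmonic mean of precision and recall."""
    if precision == 0.0 and recall == 0.0:
        return 0.0
    b2 = beta * beta
    return (1 + b2) * precision * recall / (b2 * precision + recall)


def average_precision(ranking: Sequence[str], relevant: Set[str]) -> float:
    """Mean of the precision values at the ranks of the relevant items."""
    hits, total = 0, 0.0
    for rank, item in enumerate(ranking, start=1):
        if item in relevant:
            hits += 1
            total += hits / rank
    return total / len(relevant) if relevant else 0.0


def mean_average_precision(runs: List[Tuple[Sequence[str], Set[str]]]) -> float:
    """Average of the per-query average precision values."""
    return sum(average_precision(r, rel) for r, rel in runs) / len(runs)


ranking = ["a", "x", "b", "y"]
relevant = {"a", "b", "c"}
p, r = precision_recall(ranking, relevant)
print(p, r, f_measure(p, r), average_precision(ranking, relevant))
```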

Fagin, Kumar, Sivakumar: Comparing Top k Lists. 2003

(full text of the article)

Discusses the standard options for comparing ordered lists and proposes new ones for

comparing top-k results (here one has to take into account that the ordering of the whole domain is unknown). A very theoretical

treatment: the measures are grouped into classes and their metric properties are examined.