Distributed environment

Using multiple processors is a long-established way to speed up computation. While the focus in the 1980s was primarily on parallel computers, since the second half of the 1990s interest has clearly shifted to distributed systems that exploit an increasingly powerful Internet. By that time, the performance of commonly available computers (workstations) had reached levels previously reserved for supercomputers. Combining such systems created clusters, whose nodes are connected by a conventional high-throughput network (typically Ethernet). As Internet bandwidth came to exceed that of local networks, it became effective to connect remote clusters into increasingly powerful units. This gave rise to grids, distributed computing systems linking tens of thousands of nodes around the world.

Further interest in the combined environment of parallel and distributed systems is emerging now that the growth of single-processor performance has practically stopped. Moore's law no longer applies to the individual processor but to groups of processors: multi-core processors are common, and even ordinary workstations are being deployed with more than one processor. Individual cluster nodes no longer consist of a single processor but of 8-16 cores, and this number is increasing. An increasingly complex distributed environment is emerging which, on the one hand, offers unprecedented computing power but, on the other hand, is hard to use efficiently, a problem that IT has still not satisfactorily solved.

A relatively simple situation arises with so-called embarrassingly parallel tasks, where the problem can be divided into a huge number of completely independent calculations. An example is SETI@home, a program that searches for extraterrestrial civilizations by analyzing signals received by radio telescopes. The volume of signal data is huge, but its parts can be processed completely independently. More than a million stations can take part in the calculations, together providing huge computing power.
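The pattern can be illustrated with a minimal Python sketch. It is not SETI@home code: the `analyze_chunk` function, the chunk size, and the synthetic signal are assumptions made purely for illustration. Each chunk is processed without any communication between workers, which is what makes the task embarrassingly parallel.

```python
from multiprocessing import Pool


def analyze_chunk(chunk):
    """Hypothetical per-chunk analysis: return the peak absolute amplitude."""
    return max(abs(sample) for sample in chunk)


def split(signal, chunk_size):
    """Cut the signal into consecutive, independent chunks."""
    return [signal[i:i + chunk_size] for i in range(0, len(signal), chunk_size)]


def analyze_parallel(signal, chunk_size=1000, workers=4):
    """Process every chunk independently in a pool of worker processes."""
    chunks = split(signal, chunk_size)
    with Pool(processes=workers) as pool:
        # map() preserves the order of the chunks in the result list.
        return pool.map(analyze_chunk, chunks)


if __name__ == "__main__":
    # Synthetic stand-in for recorded telescope data (assumption).
    signal = [((i * 37) % 101) - 50 for i in range(10_000)]
    peaks = analyze_parallel(signal)
    print(len(peaks), max(peaks))
```

Because no chunk depends on another, the same structure scales from a local process pool to many remote machines; only the mechanism that hands out chunks and collects results changes.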

However, for "common" algorithms parallelization is much more difficult, and a suitable environment that supports distributed computing has to be created. In this context, we have long been working on building a universally usable distributed environment, as well as on specific ways of using it.

The practical aspects of building a distributed environment, and the related issues that are primarily a matter of development, are addressed by CESNET in cooperation with us. At FI and ICS we deal in particular with the following areas:

- Distributed security, with a special focus on authentication methods, cooperation of the various authentication mechanisms suitable for large distributed environments, federated authentication approaches, the basics of authorization, and more.

- Distributed storage as a necessary condition for a usable distributed environment.

- Distributed computing virtualization.

- Distributed computing, with support for specific applications, especially:

  - Haptic interactions, which represent the computationally extremely demanding task of quickly calculating deformations of complex objects. Haptic interaction requires thousands of calculations of the deformed object's coordinates per second, which can be handled either by large-scale off-line calculations or by on-line distributed subspace computations using accelerators; we use fast graphics processors (NVIDIA CUDA architectures and Tesla).

  - Distributed multimedia processing for fast transcoding of even long video recordings (e.g. lectures) using decomposition, parallel distributed processing, and subsequent composition of the recoded recordings. This is addressed within the Distributed Encoding Environment (DEE) project. Thanks to the distribution of the calculation, it takes about 8 minutes to process one two-hour lecture, whereas it would take about one hour on a single machine.
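The lecture-transcoding figures quoted above imply the following speedup. This is a simple worked calculation; $T_1$ and $T_p$ are notation introduced here for the single-machine and distributed processing times.

$$
S = \frac{T_1}{T_p} \approx \frac{60\ \text{min}}{8\ \text{min}} = 7.5
$$

The number of nodes used is not stated here, so the parallel efficiency cannot be derived from these figures alone.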

Security

In particular, we focus on authentication methods using different identification data and mechanisms, such as Kerberos, digital certificates, and conventional and one-time passwords, and on possible transformations between these mechanisms. We also focus on using identity federations based on Shibboleth and SAML and on integrating them into distributed applications. Examples of such applications are a pathology atlas that allows access from several academic federations in Europe, and an online certification authority whose certificates, which contain additional user attributes, form the basis of our secure videoconferencing solution.

Since many current systems use digital certificates, we address PKI deployment in a widely distributed environment and the PKI issues that do not show up on a small scale. We are looking at ways to simplify the acquisition of digital certificates, for example by linking it to institutional user management systems. For instance, the connection we developed to the authentication mechanism of the University Study Room allows transparent access to secured MU sites. Other aspects we have recently addressed include reliable distribution of certificate revocation lists and support for smart cards in distributed systems.

Distributed storage

Sufficient disk capacity is increasingly becoming one of the main criteria when assessing a new computer. The ability to compute, from which computers take their name, is becoming secondary as processor performance grows, and the ability to store and process data comes first. An analogous trend can be observed in large distributed systems, where new ways of using the disk capacity of systems interconnected by computer networks have been explored in recent years.

Distributed data storage brings with it a number of interesting theoretical issues. A number of distributed storage systems are currently being developed, indicating that distributed storage is of great interest and that it is not easy to create sufficiently versatile distributed storage. Our current research focuses on:

- Distributed storage usable for the home directories of many users. In this area, traditional systems such as AFS or NFS have proven to be highly inadequate.

- Distributed storage for fast swap space. Many new solutions have emerged in this area recently, but none of them meets our requirements (robustness, security, speed).

- Robust hierarchical high-capacity distributed storage. Grid repositories such as dCache operate in this area, but they do not cover all areas of distributed storage research.

One of our first distributed data storage projects was DiDaS (Distributed Data Storage) in 2003, in which we built a network of data storage sites in major cities of the Czech Republic. The pilot application using the results of the DiDaS project is distributed lecture processing (within the DEE project, which illustrates the interconnection of distributed storage and distributed computing). This application is still in use today; it has processed hundreds of hours of lectures from the Faculty of Informatics, and several hundred terabytes of data have flowed through it.

Another distributed data storage project was PADS (Distributed Warehouse Logs and Applications) in 2006. Within this project, we devoted ourselves to a neglected topic: data security in distributed repositories. We designed and implemented an authentication and authorization system for a distributed environment, which we consider to be a relatively unique solution.

Currently, we are working on deploying NFSv4 for the home directories of cluster users. We are also working on IPv6 support and keyring support for NFSv4. We are exploring systems such as Lustre, PVFS2, and GlusterFS for fast network swapping. At the university level, we explore and develop ways for the Institute of Computer Science to provide network storage capacity for the entire university.

Virtualization

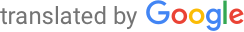

Delivering virtual machines to users, instead of running tasks on physical nodes in the traditional way, brings a new quality of service, especially in the support of interactive tasks, in pre-emption, and in the ability to manage such an infrastructure.

In traditional computing systems, the administrator installs and manages the operating system and applications. Users submit their computational tasks to the task scheduler and have little control over task execution. Virtualizing the computers in a cluster introduces an additional "level of indirection", separating the operating system installation from the physical machine.

Users can thus obtain significantly greater resource management rights. They can use their own operating system installations (and have administrator privileges at runtime), install their own applications, and use their own task scheduler in a virtual cluster.

This leads to a significant change in the management of such infrastructure. In particular, we deal with, but are not limited to, the following issues.

- Resource scheduling for virtual machines. The scheduling granularity has changed from running tasks to starting up computers.

- Security. Users cannot be allowed direct access to the network under the infrastructure provider's identity if they have administrator rights to computers on that network.

- Network support. Virtual clusters may need to be encapsulated in virtual networks that span extensive physical networks, which raises the issues of user access to the encapsulated networks and of access to external data sources and services.

- Virtual machine control. Virtual machines can coexist on a shared physical infrastructure, so their life cycle and their access to resources need to be managed.

Haptic interactions

An interesting area of research in virtual reality is haptic interaction with deformable objects. A user equipped with a force-feedback haptic device uses a probe (test object) to interact with a virtual object that changes its shape according to the probe's position.

One application of these models is so-called surgical simulators, which make it possible to perform virtual operations. They can be used for training future surgeons, for planning complex procedures on a model of a particular patient, or for practicing operations in non-standard conditions (e.g. in the absence of gravity).

The basic requirement for these simulators is realistic behavior of the simulated objects, which requires a physically based formulation of the problem. Such simulation is computationally demanding, however, because it usually leads to solving large systems of nonlinear equations. At the same time, realistic haptic interaction requires a high refresh rate (more than 1 kHz), so the calculation is not feasible in real time. At the Faculty of Informatics, we design algorithms that make it possible to combine haptic interaction with computationally demanding nonlinear models. The algorithms are based on pre-computing the state space, which is then interpolated during the interaction itself. The pre-computation is carried out in a distributed environment, which significantly reduces the waiting time for the pre-computed data.
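The idea of pre-computing the state space and interpolating during interaction can be sketched in one dimension. This is a toy illustration, not the group's algorithm: `expensive_force` merely stands in for the nonlinear solver, and the uniform grid and linear interpolation are assumptions chosen for brevity.

```python
import math


def expensive_force(depth):
    """Placeholder for a costly nonlinear simulation (assumed response curve)."""
    return depth ** 3 + 2.0 * depth


def precompute(lo, hi, n):
    """Off-line phase: sample the model at n + 1 evenly spaced probe depths."""
    step = (hi - lo) / n
    return lo, step, [expensive_force(lo + i * step) for i in range(n + 1)]


def interpolate(table, depth):
    """On-line phase: cheap linear interpolation between stored samples."""
    lo, step, values = table
    pos = (depth - lo) / step
    # Clamp the cell index so that queries at the upper bound stay in range.
    i = min(max(int(math.floor(pos)), 0), len(values) - 2)
    t = pos - i
    return (1.0 - t) * values[i] + t * values[i + 1]


if __name__ == "__main__":
    # Each table entry is independent, so this phase could be distributed.
    table = precompute(0.0, 1.0, 100)
    for depth in (0.0, 0.255, 1.0):
        print(depth, interpolate(table, depth), expensive_force(depth))
```

The on-line lookup costs only a few arithmetic operations per haptic frame, while all calls to the expensive model are moved into the off-line phase.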

We are also currently addressing a modification of this approach whereby the state space required for interpolation is generated directly during interaction, thereby eliminating the pre-computation phase, but with greater demands on the speed of the solution. To achieve these goals, we are currently testing the latest technologies such as low-latency networks (Infiniband) and GPU-accelerated calculations. In the future, we plan to design more complex models that allow cutting and tearing, for example.

Information for students

Distributed computing and distributed data storage offer a number of very interesting research topics, and a number of bachelor's and master's theses have already been written in this area. However, we are still looking for candidates who would like to contribute their own new ideas. Anyone interested in the activities of our group can take part through a bachelor's or master's thesis, through doctoral study, or through simple external cooperation on one of our projects. The IS MU lists a number of available bachelor's and master's thesis topics supervised by individual members of the research team; by personal agreement it is also possible to propose new assignments from the research areas of the group.

International and national cooperation

Our group works closely with a number of international institutions, the most important of which are:

- University of Tennessee, Knoxville, USA

- Louisiana State University, Center for Computational Technologies, USA

- CERN, Switzerland

- INFN, Italy

Our group is involved in a number of international projects.

Our main partner at the national level is the association CESNET z.s.p.o., Zikova 4, Prague; the team also includes employees of the Institute of Computer Science MU.

Current grant projects

- Highly Parallel and Distributed Computing Systems, 2005-2011, research plan no. 0021622419, Ministry of Education, Youth and Sports of the Czech Republic

- MUv6

- CAT

Research team from MU

Group leader:

Luděk Matyska

Employees:

David Antoš

Lukáš Hejtmánek

Daniel Kouřil

Aleš Křenek

Miroslav Ruda

PhD students:

Igor Peterlík

Jiří Filipovič

Daniel Kouřil

Michal Procházka

Aleš Červenka

Jana Šimková

Bc. and Mgr. students:

Jan Fousek

Jiří Sedláček

Contact

doc. Prof. RNDr. Luděk Matyska, CSc.

ludek@ics.muni.cz